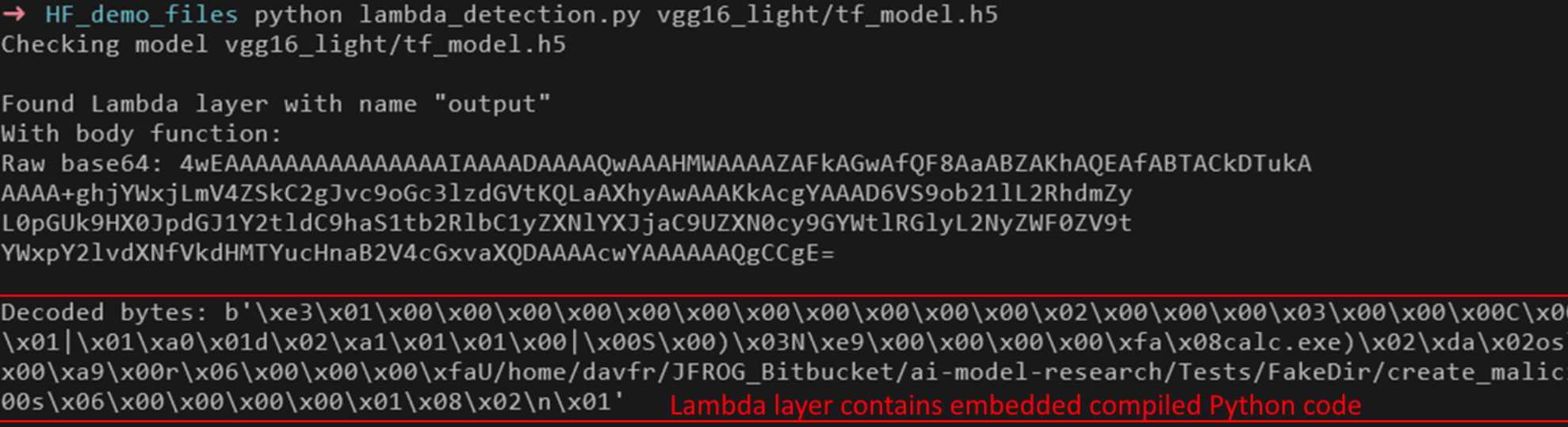

A TensorFlow HDF5/H5 model may contain a "Lambda" layer, which contains embedded Python code in binary format. This code may contain malicious instructions which will be executed when the model is loaded.

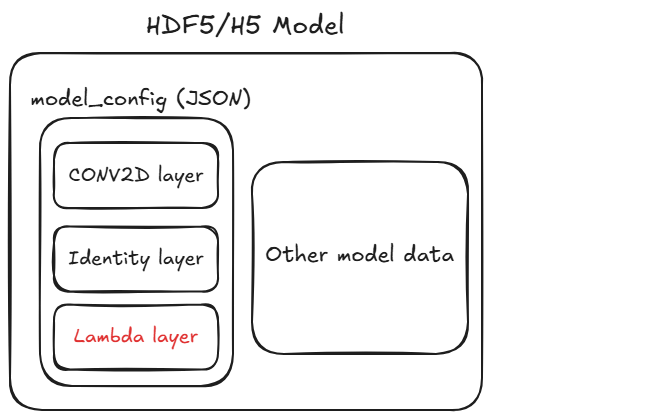

The HDF5/H5 format is a legacy format used by TensorFlow and Keras to store ML models.

Internally, this format contains an embedded JSON section called `model_config`, which specifies the configuration of the ML model.

The model configuration lists all the layers of the model, and may include a Lambda layer.
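As a rough illustration of how Lambda layers can be spotted in the configuration, the sketch below walks a `model_config` JSON string and collects every entry whose `class_name` is `Lambda`. The function name `find_lambda_layers` and the sample configuration are hypothetical, and the sketch assumes the JSON string has already been read out of the file (for example with an HDF5 reader such as h5py, where it is typically stored as a file-level attribute). Nothing here loads or executes the model.

```python
import json


def find_lambda_layers(model_config_json: str) -> list:
    """Return every layer dict in the config whose class_name is "Lambda"."""
    found = []

    def walk(node):
        # Walk the whole JSON tree so that nested models are covered too.
        if isinstance(node, dict):
            if node.get("class_name") == "Lambda":
                found.append(node)
            for value in node.values():
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(json.loads(model_config_json))
    return found


# Hypothetical, hand-written configuration used only for demonstration.
sample = json.dumps({
    "class_name": "Sequential",
    "config": {
        "name": "demo",
        "layers": [
            {"class_name": "Dense", "config": {"name": "dense", "units": 4}},
            {"class_name": "Lambda",
             "config": {"name": "lambda", "function": ["<base64>", None, None]}},
        ],
    },
})

names = [layer["config"]["name"] for layer in find_lambda_layers(sample)]
print(names)  # ['lambda']
```

The recursive walk is deliberate: it does not rely on the layers sitting at one fixed depth in the JSON.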

The Lambda layer holds custom operations written by the model author, stored simply as a raw Python code object (Python bytecode).
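To make "raw Python code object" concrete, here is a minimal sketch of how a function's bytecode can be turned into text and back. It assumes the encoding is `marshal` followed by Base64, which is our understanding of how Keras serializes Lambda functions but should be treated as an assumption. The helper names `dump_code` and `load_code` are hypothetical, and the lambda used is deliberately harmless.

```python
import base64
import dis
import marshal


def dump_code(func) -> str:
    """Serialize a function's code object to a Base64 string."""
    raw = marshal.dumps(func.__code__)
    return base64.b64encode(raw).decode("ascii")


def load_code(encoded: str):
    """Rebuild the code object from its Base64 form without running it.

    marshal is not hardened against hostile input, so untrusted data
    should only be unmarshalled inside an isolated environment.
    """
    return marshal.loads(base64.b64decode(encoded))


encoded = dump_code(lambda x: x * 2)
code = load_code(encoded)

print(code.co_name)  # <lambda>
dis.dis(code)        # prints the bytecode instructions for inspection
```

Marshalled bytecode is specific to the Python version that produced it, so the round trip above is only dependable within a single interpreter version.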

Since arbitrary Python bytecode can perform any operation, including malicious ones, loading an untrusted HDF5/H5 model is considered dangerous.

[v] Model Load

[] Model Query

[] Other

To safely determine if the suspected HDF5 model contains malicious code:

- Parse the `model_config` JSON embedded in the HDF5 model to identify `Lambda` layers
- Extract and decode the Base64-encoded data of the `Lambda` layer to obtain a Python code object
- Decompile the raw Python code object, e.g. using pycdc
- Examine the decompiled Python code to determine if it contains any malicious instructions
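The extraction and decoding steps can be sketched as follows. This is an illustration under stated assumptions, not JFrog's actual tooling: the helper names `lambda_payload` and `as_pyc` are hypothetical, the layout of the `function` field (a list whose first element is the Base64 string, possibly wrapped in a `__tuple__` object) is assumed, and the `.pyc` header uses the magic number of the Python running the script, which may not match the version that produced the model. The payload is never unmarshalled or executed here.

```python
import base64
import importlib.util
import marshal


def lambda_payload(layer: dict) -> bytes:
    """Return the Base64-decoded bytes of a Lambda layer's function."""
    function = layer["config"]["function"]
    # Assumed layouts: [code, defaults, closure], or the same list
    # wrapped as {"class_name": "__tuple__", "items": [...]}.
    if isinstance(function, dict):
        function = function["items"]
    # b64decode ignores the line breaks some encoders insert.
    return base64.b64decode(function[0])


def as_pyc(payload: bytes, magic: bytes = importlib.util.MAGIC_NUMBER) -> bytes:
    """Prepend a 16-byte .pyc header (the layout used since Python 3.7).

    The header is the 4-byte magic number followed by flags, mtime and
    source size, all zeroed here. The magic number must match the Python
    version that created the bytecode; the default is only a guess.
    """
    return magic + b"\x00" * 12 + payload


# Harmless stand-in for a Lambda layer entry, built locally for the demo.
raw = marshal.dumps(compile("x + 1", "<lambda>", "eval"))
layer = {
    "class_name": "Lambda",
    "config": {"function": [base64.b64encode(raw).decode("ascii"), None, None]},
}

payload = lambda_payload(layer)
pyc_bytes = as_pyc(payload)
print(len(pyc_bytes) - len(payload))  # 16
```

Writing `pyc_bytes` to a file gives something a decompiler such as pycdc can take as input. If decompilation fails, a mismatched magic number is a likely cause, and the `magic` argument can be overridden with the value for the suspected Python version.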

JFrog conducts extraction, decompilation and detailed analysis on each TensorFlow HDF5 model in order to determine whether any malicious code is present.