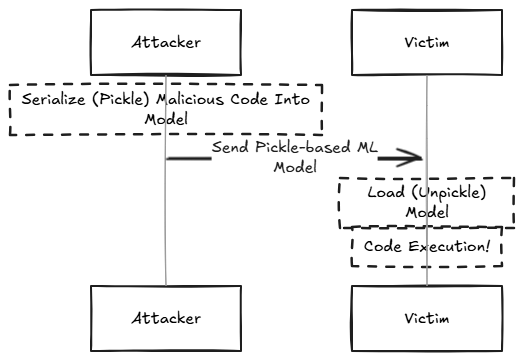

The Pickle-based model contains serialized Pickle data that references the getattr function. Such data may cause malicious Python code to execute when the model is loaded.

Many ML model formats, including those used by PyTorch, Joblib and NumPy, use Python's Pickle serialization format as part of their internal storage.

The Pickle format is well known to be dangerous. Besides plain data, a pickle can contain instructions to import and call arbitrary Python functions, and those calls run automatically when the file is loaded (unpickled).
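A minimal, harmless illustration of why loading a pickle runs code: an object's standard `__reduce__` method tells the unpickler which callable to invoke and with which arguments. The class name `Payload` is hypothetical, and it calls `print` instead of anything dangerous.

```python
import pickle

class Payload:
    """Hypothetical object whose pickled form instructs the loader to call a function."""

    def __reduce__(self):
        # Return (callable, args): pickle.loads() will evaluate print(*args).
        return (print, ("this ran inside pickle.loads()",))

data = pickle.dumps(Payload())

# Loading never touches the Payload class. The stream only imports
# builtins.print and calls it, so the message is printed and None is returned.
result = pickle.loads(data)
```

An attacker simply substitutes a dangerous callable for `print`. The loader cannot tell the difference without inspecting the stream first.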

Specifically, the serialized code references the getattr function, which many ML model scanners flag as potentially malicious.

getattr is a basic built-in function used in many legitimate codebases, but it can be abused to run malicious code.
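Scanners typically detect getattr by listing the globals a pickle imports, without loading it. The sketch below shows that idea using only the standard `pickletools.genops` function. The helper name `find_globals` is hypothetical and is not taken from any particular scanner. It is a heuristic that resolves `GLOBAL`/`INST` (older protocols) and `STACK_GLOBAL` (protocol 4+), not a full pickle interpreter.

```python
import pickle
import pickletools

def find_globals(data: bytes) -> set[tuple[str, str]]:
    """List the (module, name) globals a pickle imports, without unpickling it.

    Hypothetical heuristic: it follows string constants and the memo just far
    enough to resolve STACK_GLOBAL, and reads GLOBAL/INST arguments directly.
    """
    found: set[tuple[str, str]] = set()
    memo: dict[int, object] = {}
    strings: list[str] = []  # string constants pushed so far, in order
    last: object = None      # most recently pushed value, if it was a string
    for opcode, arg, _pos in pickletools.genops(data):
        op = opcode.name
        if op in ("GLOBAL", "INST"):
            module, name = arg.split(" ", 1)
            found.add((module, name))
            last = None
        elif op == "STACK_GLOBAL":
            # Protocol 4+: module and name are the two most recent strings.
            if len(strings) >= 2:
                found.add((strings[-2], strings[-1]))
            last = None
        elif op == "MEMOIZE":
            memo[len(memo)] = last
        elif op in ("PUT", "BINPUT", "LONG_BINPUT"):
            memo[arg] = last
        elif op in ("GET", "BINGET", "LONG_BINGET"):
            # A memoized string (e.g. a reused "builtins") counts as a push.
            last = memo.get(arg)
            if isinstance(last, str):
                strings.append(last)
        elif isinstance(arg, str):
            strings.append(arg)
            last = arg
        else:
            last = None
    return found
```

Even a harmless pickle of `str.upper` imports `builtins.getattr`, because Python pickles method descriptors and bound built-in methods as `getattr(owner, name)`. This is one reason benign model files can trip getattr-based rules.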

- [x] Model Load
- [ ] Model Query
- [ ] Other

Many legitimate ML libraries, such as fastai, use getattr for valid reasons when training a model.

In many deep learning frameworks, and especially in object detection models, getattr is commonly used in model definitions. It retrieves methods or attributes by name at runtime, which makes model code more flexible.
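A minimal sketch of this legitimate pattern: the hypothetical `TinyModel` below selects its activation function from a configuration string. The standard `math` module stands in for a framework namespace such as `torch.nn`. The lookup is confined to that single module, and the name comes from a config the model author controls.

```python
import math

class TinyModel:
    """Hypothetical model whose activation is chosen by name from a config."""

    def __init__(self, activation: str = "tanh"):
        # Dynamic lookup: "tanh" -> math.tanh, "exp" -> math.exp, and so on.
        # The name only ever selects attributes of the math module.
        self.activation = getattr(math, activation)

    def forward(self, x: float) -> float:
        return self.activation(x)

print(TinyModel("tanh").forward(0.0))  # 0.0
print(TinyModel("exp").forward(0.0))   # 1.0
```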

However, getattr can also be abused for malicious purposes. For example:

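```python
import os

def process_data(user_input):
    # getattr looks up os.system by name, so no direct "os.system"
    # attribute access appears in the source.
    dangerous_func = getattr(os, 'system')
    # user_input is interpolated into a shell command: the ';' ends the
    # echo and the shell then runs whatever follows it.
    dangerous_func(f'echo Processing {user_input}')

user_controlled_input = "data; rm -rf ~/*"  # destructive payload: do not run

process_data(user_controlled_input)
```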

In this example, getattr() retrieves os.system by name instead of through a direct reference, which makes the call less conspicuous. Because the user input reaches the shell unsanitized, the call executes a destructive shell command.

To determine whether a given getattr use is benign:

- Examine the specific parameters passed to getattr
- Verify the source and context of the attribute access
- Confirm that the object and attribute namespaces are controlled and trusted
- Validate that the retrieved attributes are limited to expected, safe operations
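One way to enforce the last two checks at load time is the "restricting globals" technique from the Python `pickle` documentation: override `Unpickler.find_class` so that only approved globals can be imported. The sketch below also swaps `getattr` for a checked version. The allowlists and the names `checked_getattr`, `GetattrCheckingUnpickler` and `safe_loads` are hypothetical. A real allowlist would come from reviewing the specific model.

```python
import builtins
import io
import pickle

# Hypothetical allowlist of (type, attribute) pairs the model may resolve via getattr.
ALLOWED_GETATTR = {(str, "upper")}
# Hypothetical allowlist of harmless builtins the pickle may import.
ALLOWED_BUILTINS = {"str", "int", "float", "tuple", "list", "dict"}

def checked_getattr(obj, name, *default):
    """getattr replacement that only resolves allowlisted attributes."""
    owner = obj if isinstance(obj, type) else type(obj)
    if (owner, name) not in ALLOWED_GETATTR:
        raise pickle.UnpicklingError(f"getattr({owner.__name__}, {name!r}) is not allowed")
    return getattr(obj, name, *default)

class GetattrCheckingUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Protocols below 3 spell the builtins module "__builtin__".
        if module in ("builtins", "__builtin__"):
            if name == "getattr":
                return checked_getattr
            if name in ALLOWED_BUILTINS:
                return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global {module}.{name} is forbidden")

def safe_loads(data: bytes):
    return GetattrCheckingUnpickler(io.BytesIO(data)).load()
```

With this loader, `safe_loads(pickle.dumps(str.upper))` succeeds. Pickles that resolve `str.lower` or import `len` are rejected before anything is called.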

JFrog conducts a detailed parameter analysis to determine whether getattr is used maliciously, by:

- Confirming the exact attributes being accessed
- Verifying that no unexpected or dangerous method calls are made
- Ruling out potential arbitrary code execution scenarios
- Classifying the getattr usage as safe if it meets all of the above criteria
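JFrog's actual tooling is not described here. The following hypothetical sketch illustrates the same idea: symbolically replaying the pickle opcodes with `pickletools.genops` so that the arguments of each getattr call can be read off without executing anything. It models only a small subset of opcodes and raises `ValueError` on any other opcode, so unfamiliar files are sent to manual review instead of being trusted by default.

```python
import pickle
import pickletools
from typing import NamedTuple

class Global(NamedTuple):
    """Symbolic stand-in for an imported global; nothing is actually imported."""
    module: str
    name: str

class Call(NamedTuple):
    """Symbolic result of a REDUCE, i.e. a call the real unpickler would make."""
    func: object
    args: tuple

PUSH_ARG = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE",
            "BININT", "BININT1", "BININT2", "INT", "LONG1"}
SMALL_TUPLES = {"EMPTY_TUPLE": 0, "TUPLE1": 1, "TUPLE2": 2, "TUPLE3": 3}

def getattr_calls(data: bytes) -> list[tuple[object, object]]:
    """Return (target, attribute) for every getattr call in a pickle, without loading it."""
    stack: list = []
    marks: list[int] = []
    memo: dict[int, object] = {}
    calls: list[tuple[object, object]] = []
    for opcode, arg, _pos in pickletools.genops(data):
        op = opcode.name
        if op in ("PROTO", "FRAME", "STOP"):
            continue
        if op in PUSH_ARG:
            stack.append(arg)
        elif op == "NONE":
            stack.append(None)
        elif op == "MEMOIZE":
            memo[len(memo)] = stack[-1]
        elif op in ("PUT", "BINPUT", "LONG_BINPUT"):
            memo[arg] = stack[-1]
        elif op in ("GET", "BINGET", "LONG_BINGET"):
            stack.append(memo[arg])
        elif op == "GLOBAL":
            stack.append(Global(*arg.split(" ", 1)))
        elif op == "STACK_GLOBAL":
            name = stack.pop()
            module = stack.pop()
            stack.append(Global(module, name))
        elif op == "MARK":
            marks.append(len(stack))
        elif op == "TUPLE":
            start = marks.pop()
            items = tuple(stack[start:])
            del stack[start:]
            stack.append(items)
        elif op in SMALL_TUPLES:
            start = len(stack) - SMALL_TUPLES[op]
            items = tuple(stack[start:])
            del stack[start:]
            stack.append(items)
        elif op == "REDUCE":
            args = stack.pop()
            func = stack.pop()
            is_getattr = (isinstance(func, Global) and func.name == "getattr"
                          and func.module in ("builtins", "__builtin__"))
            if is_getattr and len(args) >= 2:
                # Record the object and the attribute name passed to getattr.
                calls.append((args[0], args[1]))
            stack.append(Call(func, args))
        else:
            raise ValueError(f"opcode {op} is not modelled; review this file manually")
    return calls
```

For the benign pickle of `str.upper`, this yields a single call, `getattr(builtins.str, 'upper')`, which a reviewer can match against an allowlist. A target that resolves to a module such as `os`, or an attribute name such as `'system'`, would instead warrant a closer look.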

This systematic approach turns an initial "potential security concern" flag into a validated safe verdict through contextual examination of each getattr call.