Defending your organization against the "Shai-Hulud" worm and its evolved variant, "Sha1-Hulud the second coming", requires immediate action and a systematic approach. This highly efficient, GitHub-weaponized exfiltration attack has compromised npm supply chains worldwide. When defending against an infected system, understanding that the malware leverages stolen tokens to create public repositories - typically identifiable by a random name and the description "Sha1-Hulud: The Second Coming" - is critical to protecting your credentials from exfiltration.

Finding this repository means an active breach is in progress. Defending your infrastructure requires swift, systematic action following a strict order of operations to contain the leak and prevent further damage.

If you've discovered a Shai-Hulud compromise, take these immediate defensive actions:

- DO NOT DELETE the malicious GitHub repository - you need it for forensics

- Immediately privatize the repository to stop credential exposure

- Disable malicious workflows(GitHub Action) and remove self-hosted runners named "SHA1HULUD"

- Remove infected packages from node_modules and clear npm cache

- Rotate ALL credentials found in the exfiltration repository

- Audit access logs for unauthorized OAuth apps and persistence mechanisms

⚠️ Critical: Complete steps 1-4 BEFORE rotating credentials, or the malware will steal your new keys.

Defending against Shai-Hulud requires a multi-layered protection approach:

Immediate Defense:

- Repository Containment: Privatize malicious repositories to cut off attacker access

- Malware Removal: Eliminate infected packages before credential rotation

- Access Revocation: Disable compromised runners and workflows

Credential Protection:

- Complete Rotation: Replace all GitHub tokens, npm credentials, and cloud keys

- Scope Reduction: Minimize permissions on newly generated credentials

- Multi-Factor Authentication: Enforce MFA on all developer and service accounts

Long-Term Prevention:

- Package Curation: Implement dependency scanning and approval workflows

- Immaturity Policies: Block newly published package versions automatically

- Continuous Monitoring: Set up alerts for suspicious repository creation and unusual package installations

The following sections provide detailed step-by-step instructions for defending your organization against this sophisticated supply chain attack.

Before continuing: DO NOT DELETE the GitHub repository that was created by Shai Hulud. Follow the guidance below.

The Shai-Hulud worm’s objective is to extract high-value credentials that facilitate lateral movement and further supply chain attacks. It achieves this by aggressively scanning the compromised developer environment. Using a stolen Developer GitHub Personal Access Token (PAT), the malware programmatically creates the exfiltration repository and commits files that categorize the stolen data.

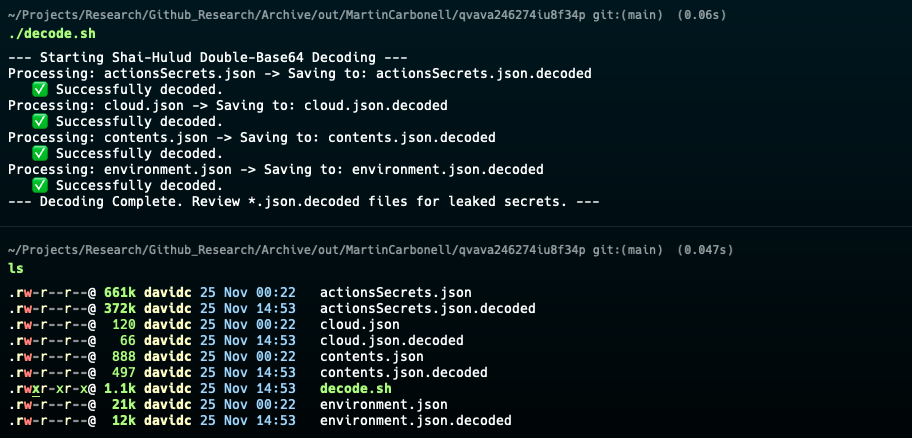

The malware commits multiple .json files, all containing JSON objects that have been subjected to double Base64 encoding to provide a basic layer of obfuscation against simple secret scans:

actionsSecrets.json: This file contains credentials specifically designed for the GitHub Actions environment. These secrets are often non-personal service tokens with broad permissions, making them extremely valuable to the attacker for automating malicious deployments or accessing corporate infrastructure.

View actionsSecrets.json example

{

"AWS_ACCESS_KEY_ID_SDK": "AKIA****KOI",

"AWS_SECRET_ACCESS_KEY_DEV": "MGcv*****bUR",

"GH_BOT_TOKEN": "ghp_*******8O8",

"NPM_AUTH_TOKEN": "NpmToken.ff*******a86",

"SECRET_KEY": "0eee*****a59",

"COCOAPODS_TRUNK_TOKEN": "8efb*****d607",

"TOKEN_ARTIFACTORY": "eyJ2*****SldU.eyJ*****ZGE2.YV7u*****oSQ",

"PHUB_EVIDENCER_SECRET_KEY_PRO": "HgV*****pU=",

"TENANT_ID": "6f2b*****94a9",

"CLIENT_SELPHID_SECRET": "VOph*****flJ",

"github_token": "ghs_7Q******sN",

"EXPO_TOKEN": "i67u****teI-",

"BROWSERSTACK_ACCESS_KEY": "N86j****nQzv",

"CLIENTSECRET_PRO": "bta****2RVr0"

}

contents.json: The primary file containing the victim's own GitHub token that was used to create the repository, along with initial system and account information.

View contents.json example

{

"system": {

"platform": "darwin",

"architecture": "arm64",

"platformDetailed": "darwin",

"architectureDetailed": "arm64",

"hostname": "LT310",

"os_user": {

"homedir": "/Users/********",

"username": "********",

"shell": "/bin/zsh",

"uid": 502,

"gid": 20

}

},

"modules": {

"github": {

"authenticated": true,

"token": "ghp_bIQGBY8TW*************lGG2Z1d3rLz",

"username": {

"login": "********",

"name": "M******",

"email": null,

"publicRepos": 6,

"followers": 0,

"following": 0,

"createdAt": "2022-10-04T08:23:41Z"

}

}

}

}

cloud.json: Contains high-priority, high-risk credentials, specifically AWS, Azure, and Google Cloud Platform (GCP) access keys/secrets.

View cloud.json example

{

"aws": {

"secrets": [

{

"name": "AWS_ACCESS_KEY_ID",

"value": "AKIAIOSFODNN7EXAMPLE"

},

{

"name": "AWS_SECRET_ACCESS_KEY",

"value": "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"

},

{

"name": "AWS_SESSION_TOKEN",

"value": "FwoGZXIvYXdzEBYaDEXAMPLETOKEN1234567890abcdefg"

}

]

},

"gcp": {

"secrets": [

{

"name": "GCP_SERVICE_ACCOUNT_KEY",

"value": "eyJ0eXBlIjoic2VydmljZV9hY2NvdW50IiwiY2xpZW50X2VtYWlsIjoiZXhhbXBsZUBwcm9qZWN0LmlhbS5nc2VydmljZWFjY291bnQuY29tIn0="

},

{

"name": "GCP_PROJECT_ID",

"value": "example-project-123456"

}

]

},

"azure": {

"secrets": [

{

"name": "AZURE_CLIENT_ID",

"value": "a1b2c3d4-e5f6-7890-abcd-ef1234567890"

},

{

"name": "AZURE_CLIENT_SECRET",

"value": "Abc8Q~EXAMPLE_SECRET_VALUE_1234567890abcdef"

},

{

"name": "AZURE_TENANT_ID",

"value": "98765432-abcd-efgh-ijkl-1234567890ab"

}

]

}

}

environment.json: A complete dump of the compromised system's environment variables (process.env), often revealing sensitive API keys, database connection strings, and internal service credentials.

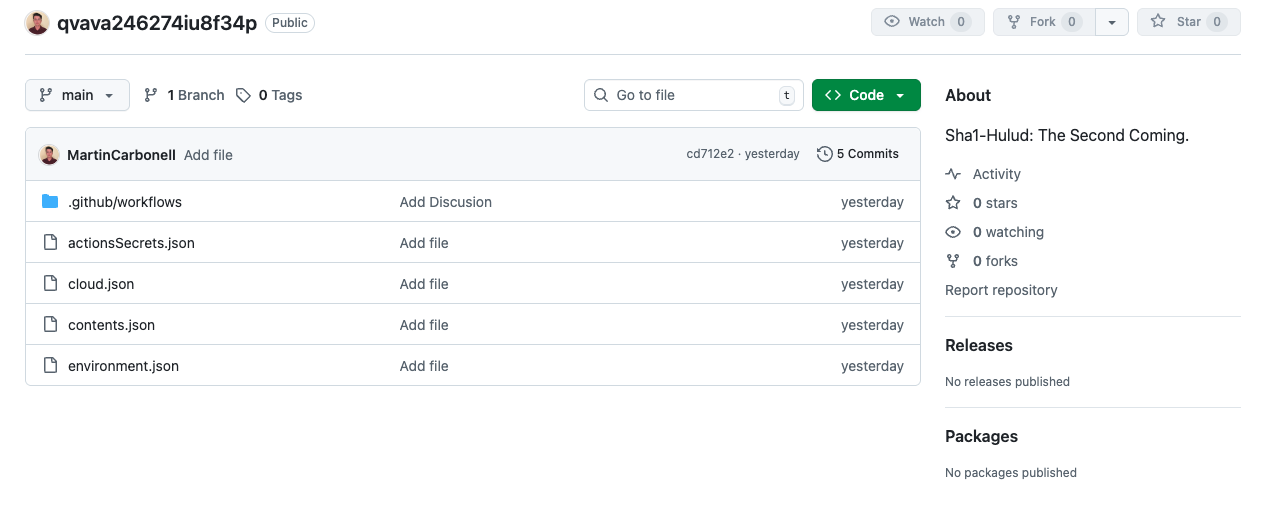

Example of GitHub repository created by Sha1-Hulud (2nd campaign) malware

Example of GitHub repository created by Sha1-Hulud (2nd campaign) malware

truffleSecrets.json: Credentials found by an aggressive file system scan using an embedded version of the open-source tool, TruffleHog.

View truffleSecrets.json example

{

"findings": [

{

"SourceMetadata": {

"Data": {

"Filesystem": {

"file": "/home/runner/work/my-project/.git/config",

"line": 12

}

}

},

"SourceName": "trufflehog - filesystem",

"DetectorType": 8,

"DetectorName": "Github",

"DetectorDescription": "GitHub Personal Access Token",

"Verified": true,

"Raw": "ghp_a1B2c3D4e5F6g7H8i9J0k1L2m3N4o5P6q7R8",

"ExtraData": {

"rotation_guide": "https://howtorotate.com/docs/tutorials/github/"

}

},

{

"SourceMetadata": {

"Data": {

"Filesystem": {

"file": "/home/runner/work/my-project/config/credentials.json",

"line": 5

}

}

},

"SourceName": "trufflehog - filesystem",

"DetectorType": 1039,

"DetectorName": "JWT",

"DetectorDescription": "JSON Web Token",

"Verified": true,

"Raw": "eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiYWRtaW4iOnRydWUsImlhdCI6MTUxNjIzOTAyMn0.POstGetfAytaZS82wHcjoTyoqhMyxXiWdR7Nn7A29DNSl0EiXLdwJ6xC6AfgZWF1bOsS_TuYI3OG85AmiExREkrS6tDfTQ2B3WXlrr-wp5AokiRbz3_oB4OxG-W9KcEEbDRcZc0nH3L7LzYptiy1PtAylQGxHTWZXtGz4ht0bAecBgmpdgXMguEIcoqPJ1n3pIWk_dUZegpqx0Lka21H6XxUTxiy8OcaarA8zdnPUnV6AmNP3ecFawIFYdvJB_cm-GvpCSbr8G8y_Mllj8f4x9nBH8pQux89_6gUY618iYv7tuPWBFfEbLxtF2pZS6YC1aSfLQxeNe8djT9YjpvRZA",

"ExtraData": {

"alg": "RS256",

"exp": "2025-12-01 00:00:00 +0000 UTC",

"iss": "https://auth.example.com"

}

},

{

"SourceMetadata": {

"Data": {

"Filesystem": {

"file": "/home/runner/work/my-project/scripts/deploy.sh",

"line": 23

}

}

},

"SourceName": "trufflehog - filesystem",

"DetectorType": 15,

"DetectorName": "PrivateKey",

"DetectorDescription": "RSA Private Key",

"Verified": false,

"Raw": "-----BEGIN RSA PRIVATE KEY-----\nMIIEpAIBAAKCAQEA2Z3qX2BTLS4e....[TRUNCATED]....\n-----END RSA PRIVATE KEY-----",

"Redacted": "-----BEGIN RSA PRIVATE KEY-----\nMIIEpAIBAAKCAQEA2Z3qX2BTLS4e"

},

{

"SourceMetadata": {

"Data": {

"Filesystem": {

"file": "/home/runner/work/my-project/.env",

"line": 8

}

}

},

"SourceName": "trufflehog - filesystem",

"DetectorType": 17,

"DetectorName": "URI",

"DetectorDescription": "URL with embedded credentials",

"Verified": false,

"Raw": "postgresql://admin:SuperSecret123@db.internal.example.com:5432/production",

"Redacted": "postgresql://admin:********@db.internal.example.com:5432/production"

},

{

"SourceMetadata": {

"Data": {

"Filesystem": {

"file": "/home/runner/work/my-project/src/services/aws.js",

"line": 15

}

}

},

"SourceName": "trufflehog - filesystem",

"DetectorType": 1,

"DetectorName": "AWS",

"DetectorDescription": "AWS Access Key",

"Verified": true,

"Raw": "AKIAIOSFODNN7EXAMPLE",

"RawV2": "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"

}

],

"executionTime": 45230,

"exitCode": 0,

"verified_secrets": 3,

"unverified_secrets": 2

}

The Shai-Hulud (1st) and Sha1-Hulud (2nd) campaigns specifically target GitHub Actions environments with two distinct, high-impact mechanisms:

- Secret Exfiltration via Malicious Workflow: The malware pushes a temporary, malicious workflow (e.g.,

.github/workflows/formatter_123456789.ymlordiscussion.yaml) to compromise repositories. This workflow's sole purpose is to list and collect all secrets defined in the repository's GitHub Secrets section and upload them as theactionsSecrets.jsonfile to the exfiltration repo. - Persistence via Self-Hosted Runner: The malware registers the infected machine (if it is a CI runner or has the necessary permissions) as a self-hosted runner named something identifiable like

SHA1HULUD, which allows the attacker continuous, remote access to the organization's network and resources.

This structured dump provides the attacker with a complete dossier for continued operation.

- Action: DO NOT DELETE THE GITHUB REPOSITORY. Instead, immediately navigate to the suspicious GitHub repository and change its visibility from Public to Private in the settings. *In case you are not able to change the visibility, clone the repository and then delete it*.

- Why: This instantly terminates public access to the data, regardless of the encoding. The repository should not be deleted since it is your primary forensic evidence.

This step is mandatory before rotating credentials. If you rotate keys while the malware is still present, it will simply steal the new keys.

- Action: Navigate to your GitHub Settings (or Organization/Enterprise Settings) → Actions → Runners.

- Find and immediately disable or remove any self-hosted runner named

SHA1HULUDor any other unrecognized runner added recently. - Why: This eliminates the attacker's persistent backdoor access to your build network.

- Check compromised repositories for new files in the

.github/workflows/directory (e.g.,formatter_*.yml). Revert/Delete these malicious workflow files.

- Remove: Completely delete the project's dependency artifacts locally (

rm -rf node_modules) and clear the local cache (npm cache clean --force). - Reinstall Safely: Update your

package.jsonto replace the malicious package’s version (https://research.jfrog.com/post/shai-hulud-the-second-coming/) with a known, safe version, then re-install the package locally (npm ci). - Re-publish package: If you are a package maintainer and a malicious package was released under your name, ensure you re-publish the package with a new, safe release.

- Identify: Use the detection script below to look on disk for a malicious package or a malicious indicators (Credit: Cobenian/shai-hulud-detect).

Security Note: We have embedded the full source code below to ensure the integrity and availability of the detection tool. Mirroring the script here eliminates dependencies on external repositories that could potentially be modified or compromised in the future, guaranteeing a safe and verifiable version for your incident response.

Click to view shai-hulud-detector.sh

#!/usr/bin/env bash

# Shai-Hulud NPM Supply Chain Attack Detection Script

# Detects indicators of compromise from September 2025 and November 2025 npm attacks

# Includes detection for "Shai-Hulud: The Second Coming" (fake Bun runtime attack)

# Usage: ./shai-hulud-detector.sh <directory_to_scan>

#

# Requires: Bash 5.0+

# Require Bash 5.0+ for associative arrays, mapfile, and modern features

if [[ -z "${BASH_VERSINFO[0]}" ]] || [[ "${BASH_VERSINFO[0]}" -lt 5 ]]; then

echo "ERROR: Shai-Hulud Detector requires Bash 5.0 or newer."

echo "You appear to be running: ${BASH_VERSION:-unknown}."

echo

echo "macOS: brew install bash && run with: /opt/homebrew/bin/bash $0 ..."

echo "Linux: install a current bash via your package manager (bash 5.x is standard on modern distros)."

exit 1

fi

set -eo pipefail

# Script directory for locating companion files (compromised-packages.txt)

SCRIPT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"

# Global temp directory for file-based storage

TEMP_DIR=""

# Global variables for risk tracking (used for exit codes)

high_risk=0

medium_risk=0

# Function: create_temp_dir

# Purpose: Create cross-platform temporary directory for findings storage

# Args: None

# Modifies: TEMP_DIR (global variable)

# Returns: 0 on success, exits on failure

create_temp_dir() {

local temp_base="${TMPDIR:-${TMP:-${TEMP:-/tmp}}}"

if command -v mktemp >/dev/null 2>&1; then

# Try mktemp with our preferred pattern

TEMP_DIR=$(mktemp -d -t shai-hulud-detect-XXXXXX 2>/dev/null || true) || \

TEMP_DIR=$(mktemp -d 2>/dev/null || true) || \

TEMP_DIR="$temp_base/shai-hulud-detect-$$-$(date +%s)"

else

# Fallback for systems without mktemp (rare with bash)

TEMP_DIR="$temp_base/shai-hulud-detect-$$-$(date +%s)"

fi

mkdir -p "$TEMP_DIR" || {

echo "Error: Cannot create temporary directory"

exit 1

}

# Create findings files

touch "$TEMP_DIR/workflow_files.txt"

touch "$TEMP_DIR/malicious_hashes.txt"

touch "$TEMP_DIR/compromised_found.txt"

touch "$TEMP_DIR/suspicious_found.txt"

touch "$TEMP_DIR/suspicious_content.txt"

touch "$TEMP_DIR/crypto_patterns.txt"

touch "$TEMP_DIR/git_branches.txt"

touch "$TEMP_DIR/postinstall_hooks.txt"

touch "$TEMP_DIR/trufflehog_activity.txt"

touch "$TEMP_DIR/shai_hulud_repos.txt"

touch "$TEMP_DIR/namespace_warnings.txt"

touch "$TEMP_DIR/low_risk_findings.txt"

touch "$TEMP_DIR/integrity_issues.txt"

touch "$TEMP_DIR/typosquatting_warnings.txt"

touch "$TEMP_DIR/network_exfiltration_warnings.txt"

touch "$TEMP_DIR/lockfile_safe_versions.txt"

touch "$TEMP_DIR/bun_setup_files.txt"

touch "$TEMP_DIR/bun_environment_files.txt"

touch "$TEMP_DIR/new_workflow_files.txt"

touch "$TEMP_DIR/github_sha1hulud_runners.txt"

touch "$TEMP_DIR/preinstall_bun_patterns.txt"

touch "$TEMP_DIR/second_coming_repos.txt"

touch "$TEMP_DIR/actions_secrets_files.txt"

touch "$TEMP_DIR/discussion_workflows.txt"

touch "$TEMP_DIR/github_runners.txt"

touch "$TEMP_DIR/malicious_hashes.txt"

touch "$TEMP_DIR/destructive_patterns.txt"

touch "$TEMP_DIR/trufflehog_patterns.txt"

}

# Function: cleanup_temp_files

# Purpose: Clean up temporary directory on script exit, interrupt, or termination

# Args: None (uses $? for exit code)

# Modifies: Removes temp directory and all contents

# Returns: Exits with original script exit code

cleanup_temp_files() {

local exit_code=$?

if [[ -n "$TEMP_DIR" && -d "$TEMP_DIR" ]]; then

rm -rf "$TEMP_DIR"

fi

exit $exit_code

}

# Set trap for cleanup on exit, interrupt, or termination

trap cleanup_temp_files EXIT INT TERM

# Color codes for output

RED='\033[0;31m'

YELLOW='\033[1;33m'

GREEN='\033[0;32m'

BLUE='\033[0;34m'

ORANGE='\033[38;5;172m' # Muted orange for stage headers (256-color mode)

NC='\033[0m' # No Color

# Known malicious file hashed (source: https://socket.dev/blog/ongoing-supply-chain-attack-targets-crowdstrike-npm-packages)

MALICIOUS_HASHLIST=(

"de0e25a3e6c1e1e5998b306b7141b3dc4c0088da9d7bb47c1c00c91e6e4f85d6"

"81d2a004a1bca6ef87a1caf7d0e0b355ad1764238e40ff6d1b1cb77ad4f595c3"

"83a650ce44b2a9854802a7fb4c202877815274c129af49e6c2d1d5d5d55c501e"

"4b2399646573bb737c4969563303d8ee2e9ddbd1b271f1ca9e35ea78062538db"

"dc67467a39b70d1cd4c1f7f7a459b35058163592f4a9e8fb4dffcbba98ef210c"

"46faab8ab153fae6e80e7cca38eab363075bb524edd79e42269217a083628f09"

"b74caeaa75e077c99f7d44f46daaf9796a3be43ecf24f2a1fd381844669da777"

"86532ed94c5804e1ca32fa67257e1bb9de628e3e48a1f56e67042dc055effb5b" # test-cases/multi-hash-detection/file1.js

"aba1fcbd15c6ba6d9b96e34cec287660fff4a31632bf76f2a766c499f55ca1ee" # test-cases/multi-hash-detection/file2.js

)

PARALLELISM=4

if [[ "$OSTYPE" == "linux-gnu"* ]]; then

PARALLELISM=$(nproc)

elif [[ "$OSTYPE" == "darwin"* ]]; then

PARALLELISM=$(sysctl -n hw.ncpu)

fi

# Timing variables

SCAN_START_TIME=0

# Function: get_elapsed_time

# Purpose: Get elapsed time since scan start in seconds

# Returns: Time in format "X.XXXs"

get_elapsed_time() {

local now=$(date +%s%N 2>/dev/null || echo "$(date +%s)000000000")

local elapsed_ns=$((now - SCAN_START_TIME))

local elapsed_s=$((elapsed_ns / 1000000000))

local elapsed_ms=$(((elapsed_ns % 1000000000) / 1000000))

printf "%d.%03ds" "$elapsed_s" "$elapsed_ms"

}

# Function: print_stage_complete

# Purpose: Print stage completion with elapsed time

# Args: $1 = stage name

print_stage_complete() {

local stage_name=$1

local elapsed=$(get_elapsed_time)

print_status "$BLUE" " $stage_name completed [$elapsed]"

}

# Associative arrays for O(1) lookups (Bash 5.0+ feature)

declare -A COMPROMISED_PACKAGES_MAP # "package:version" -> 1

declare -A COMPROMISED_NAMESPACES_MAP # "@namespace" -> 1

# Function: load_compromised_packages

# Purpose: Load compromised package database from external file or fallback list

# Args: None (reads from compromised-packages.txt in script directory)

# Modifies: COMPROMISED_PACKAGES_MAP (global associative array)

# Returns: Populates COMPROMISED_PACKAGES_MAP for O(1) lookups

load_compromised_packages() {

local packages_file="$SCRIPT_DIR/compromised-packages.txt"

local count=0

if [[ -f "$packages_file" ]]; then

# Use mapfile to read all valid lines at once, then populate associative array

local -a raw_packages

mapfile -t raw_packages < <(

grep -v '^[[:space:]]*#' "$packages_file" | \

grep -E '^[a-zA-Z@][^:]+:[0-9]+\.[0-9]+\.[0-9]+' | \

tr -d $'\r'

)

# Populate associative array for O(1) lookups

for pkg in "${raw_packages[@]}"; do

COMPROMISED_PACKAGES_MAP["$pkg"]=1

((count++)) || true # Prevent errexit when count starts at 0

done

print_status "$BLUE" "📦 Loaded $count compromised packages from $packages_file (O(1) lookup enabled)"

else

# Fallback to embedded list if file not found

print_status "$YELLOW" "⚠️ Warning: $packages_file not found, using embedded package list"

local fallback_packages=(

"@ctrl/tinycolor:4.1.0"

"@ctrl/tinycolor:4.1.1"

"@ctrl/tinycolor:4.1.2"

"@ctrl/deluge:1.2.0"

"angulartics2:14.1.2"

"koa2-swagger-ui:5.11.1"

"koa2-swagger-ui:5.11.2"

)

for pkg in "${fallback_packages[@]}"; do

COMPROMISED_PACKAGES_MAP["$pkg"]=1

done

fi

}

# Known compromised namespaces - packages in these namespaces may be compromised

# Stored in both array (for iteration) and associative array (for O(1) lookup)

COMPROMISED_NAMESPACES=(

"@crowdstrike"

"@art-ws"

"@ngx"

"@ctrl"

"@nativescript-community"

"@ahmedhfarag"

"@operato"

"@teselagen"

"@things-factory"

"@hestjs"

"@nstudio"

"@basic-ui-components-stc"

"@nexe"

"@thangved"

"@tnf-dev"

"@ui-ux-gang"

"@yoobic"

)

# Populate namespace associative array for O(1) lookups

for ns in "${COMPROMISED_NAMESPACES[@]}"; do

COMPROMISED_NAMESPACES_MAP["$ns"]=1

done

# Function: is_compromised_package

# Purpose: O(1) lookup to check if a package:version is compromised

# Args: $1 = package:version string

# Returns: 0 if compromised, 1 if not

is_compromised_package() {

[[ -v COMPROMISED_PACKAGES_MAP["$1"] ]]

}

# Function: is_compromised_namespace

# Purpose: O(1) lookup to check if a namespace is compromised

# Args: $1 = @namespace string

# Returns: 0 if compromised, 1 if not

is_compromised_namespace() {

[[ -v COMPROMISED_NAMESPACES_MAP["$1"] ]]

}

# Function: cleanup_and_exit

# Purpose: Clean up background processes and temp files when script is interrupted

# Args: None

# Modifies: Kills all background jobs, removes temp files

# Returns: Exits with code 130 (standard for Ctrl-C interruption)

cleanup_and_exit() {

print_status "$YELLOW" "🛑 Scan interrupted by user. Cleaning up..."

# Kill all background jobs (more portable approach)

local job_pids

job_pids=$(jobs -p 2>/dev/null || true)

if [[ -n "$job_pids" ]]; then

echo "$job_pids" | while read -r pid; do

[[ -n "$pid" ]] && kill "$pid" 2>/dev/null || true

done

# Wait a moment for jobs to terminate

sleep 0.5

# Force kill any remaining processes

echo "$job_pids" | while read -r pid; do

[[ -n "$pid" ]] && kill -9 "$pid" 2>/dev/null || true

done

fi

# Clean up temp directory

if [[ -n "$TEMP_DIR" && -d "$TEMP_DIR" ]]; then

rm -rf "$TEMP_DIR"

fi

print_status "$NC" "Cleanup complete. Exiting."

exit 130

}

# Phase 2: Bash 3.x Compatible In-Memory Caching System

# Uses temp files in memory (tmpfs) for compatibility with older Bash versions

# Function: get_cached_file_hash

# Purpose: Get cached SHA256 hash using tmpfs for near-memory speed

# Args: $1 = file_path (absolute path to file)

# Modifies: Creates small cache files in TEMP_DIR for reuse

# Returns: Echoes SHA256 hash of file

get_cached_file_hash() {

local file_path="$1"

# Create cache key from file path, size, and modification time

local file_size file_mtime cache_key hash_cache_file

file_size=$(stat -f%z "$file_path" 2>/dev/null || stat -c%s "$file_path" 2>/dev/null || echo "0")

file_mtime=$(stat -f%m "$file_path" 2>/dev/null || stat -c%Y "$file_path" 2>/dev/null || echo "0")

cache_key=$(echo "${file_path}:${file_size}:${file_mtime}" | shasum 2>/dev/null | cut -d' ' -f1 || echo "${file_path//\//_}_${file_size}_${file_mtime}")

hash_cache_file="$TEMP_DIR/hcache_$cache_key"

# Check cache first - small file reads are very fast

if [[ -f "$hash_cache_file" ]]; then

cat "$hash_cache_file"

return 0

fi

# Calculate hash and store in cache

local file_hash=""

if command -v sha256sum >/dev/null 2>&1; then

file_hash=$(sha256sum "$file_path" 2>/dev/null | cut -d' ' -f1)

elif command -v shasum >/dev/null 2>&1; then

file_hash=$(shasum -a 256 "$file_path" 2>/dev/null | cut -d' ' -f1)

fi

# Store in cache for future lookups

if [[ -n "$file_hash" ]]; then

echo "$file_hash" > "$hash_cache_file"

echo "$file_hash"

fi

}

# Function: get_cached_package_dependencies

# Purpose: Get cached package dependencies using tmpfs storage

# Args: $1 = package_file (path to package.json)

# Modifies: Creates cache files in TEMP_DIR

# Returns: Echoes package dependencies in name:version format

get_cached_package_dependencies() {

local package_file="$1"

# Create cache key from file path, size, and modification time

local file_size file_mtime cache_key deps_cache_file

file_size=$(stat -f%z "$package_file" 2>/dev/null || stat -c%s "$package_file" 2>/dev/null || echo "0")

file_mtime=$(stat -f%m "$package_file" 2>/dev/null || stat -c%Y "$package_file" 2>/dev/null || echo "0")

cache_key=$(echo "${package_file}:${file_size}:${file_mtime}" | shasum 2>/dev/null | cut -d' ' -f1 || echo "${package_file//\//_}_${file_size}_${file_mtime}")

deps_cache_file="$TEMP_DIR/dcache_$cache_key"

# Check cache first

if [[ -f "$deps_cache_file" ]]; then

cat "$deps_cache_file"

return 0

fi

# Extract dependencies and store in cache

local deps_output

deps_output=$(awk '/"dependencies":|"devDependencies":/{flag=1;next}/}/{flag=0}flag' "$package_file" 2>/dev/null || true)

if [[ -n "$deps_output" ]]; then

echo "$deps_output" > "$deps_cache_file"

echo "$deps_output"

fi

}

# File-based storage for findings (replaces global arrays for memory efficiency)

# Files created in create_temp_dir() function:

# - workflow_files.txt, malicious_hashes.txt, compromised_found.txt

# - suspicious_found.txt, suspicious_content.txt, crypto_patterns.txt

# - git_branches.txt, postinstall_hooks.txt, trufflehog_activity.txt

# - shai_hulud_repos.txt, namespace_warnings.txt, low_risk_findings.txt

# - integrity_issues.txt, typosquatting_warnings.txt, network_exfiltration_warnings.txt

# - lockfile_safe_versions.txt, bun_setup_files.txt, bun_environment_files.txt

# - new_workflow_files.txt, github_sha1hulud_runners.txt, preinstall_bun_patterns.txt

# - second_coming_repos.txt, actions_secrets_files.txt, trufflehog_patterns.txt

# Function: usage

# Purpose: Display help message and exit

# Args: None

# Modifies: None

# Returns: Exits with code 1

usage() {

echo "Usage: $0 [--paranoid] [--parallelism N] <directory_to_scan>"

echo

echo "OPTIONS:"

echo " --paranoid Enable additional security checks (typosquatting, network patterns)"

echo " These are general security features, not specific to Shai-Hulud"

echo " --parallelism N Set the number of threads to use for parallelized steps (current: ${PARALLELISM})"

echo ""

echo "EXAMPLES:"

echo " $0 /path/to/your/project # Core Shai-Hulud detection only"

echo " $0 --paranoid /path/to/your/project # Core + advanced security checks"

exit 1

}

# Function: print_status

# Purpose: Print colored status messages to console

# Args: $1 = color code (RED, YELLOW, GREEN, BLUE, NC), $2 = message text

# Modifies: None (outputs to stdout)

# Returns: Prints colored message

print_status() {

local color=$1

local message=$2

echo -e "${color}${message}${NC}"

}

# Function: show_file_preview

# Purpose: Display file context for HIGH RISK findings only

# Args: $1 = file_path, $2 = context description

# Modifies: None (outputs to stdout)

# Returns: Prints formatted file preview box for HIGH RISK items only

show_file_preview() {

local file_path=$1

local context="$2"

# Only show file preview for HIGH RISK items to reduce noise

if [[ "$context" == *"HIGH RISK"* ]]; then

echo -e " ${BLUE}┌─ File: $file_path${NC}"

echo -e " ${BLUE}│ Context: $context${NC}"

echo -e " ${BLUE}└─${NC}"

echo

fi

}

# Function: show_progress

# Purpose: Display real-time progress indicator for file scanning operations

# Args: $1 = current files processed, $2 = total files to process

# Modifies: None (outputs to stderr with ANSI escape codes)

# Returns: Prints "X / Y checked (Z %)" with line clearing

show_progress() {

local current=$1

local total=$2

local percent=0

[[ $total -gt 0 ]] && percent=$((current * 100 / total))

echo -ne "\r\033[K$current / $total checked ($percent %)"

}

# Function: count_files

# Purpose: Count files matching find criteria, returns clean integer

# Args: All arguments passed to find command (e.g., path, -name, -type)

# Modifies: None

# Returns: Integer count of matching files (strips whitespace)

count_files() {

(find "$@" 2>/dev/null || true) | wc -l | tr -d ' '

}

# Function: collect_all_files

# Purpose: Single comprehensive file collection to replace 20+ separate find operations

# Args: $1 = scan_dir (directory to scan)

# Modifies: Creates categorized temp files for all functions to use

# Returns: Populates temp files with file paths by category

collect_all_files() {

local scan_dir="$1"

# Ensure temp directory exists

[[ -d "$TEMP_DIR" ]] || mkdir -p "$TEMP_DIR"

# Single comprehensive find operation for all file types needed (silent)

{

find "$scan_dir" \( \

-name "*.js" -o -name "*.ts" -o -name "*.json" -o -name "*.mjs" -o \

-name "*.yml" -o -name "*.yaml" -o \

-name "*.py" -o -name "*.sh" -o -name "*.bat" -o -name "*.ps1" -o -name "*.cmd" -o \

-name "package.json" -o \

-name "package-lock.json" -o -name "yarn.lock" -o -name "pnpm-lock.yaml" -o \

-name "shai-hulud-workflow.yml" -o \

-name "setup_bun.js" -o -name "bun_environment.js" -o \

-name "actionsSecrets.json" -o \

-name "*trufflehog*" -o \

-name "formatter_*.yml" \

\) -type f 2>/dev/null || true

} > "$TEMP_DIR/all_files_raw.txt"

# Also collect directories in a separate operation (silent)

{

find "$scan_dir" -name ".git" -type d 2>/dev/null || true | sed 's|/.git$||'

} > "$TEMP_DIR/git_repos.txt"

{

find "$scan_dir" -type d \( -name ".dev-env" -o -name "*shai*hulud*" \) 2>/dev/null || true

} > "$TEMP_DIR/suspicious_dirs.txt"

# Categorize files for specific functions using grep (much faster than separate finds)

grep "package\.json$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/package_files.txt" 2>/dev/null || touch "$TEMP_DIR/package_files.txt"

grep "\.\(js\|ts\|json\|mjs\)$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/code_files.txt" 2>/dev/null || touch "$TEMP_DIR/code_files.txt"

grep "\.\(yml\|yaml\)$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/yaml_files.txt" 2>/dev/null || touch "$TEMP_DIR/yaml_files.txt"

grep "\.\(py\|sh\|bat\|ps1\|cmd\)$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/script_files.txt" 2>/dev/null || touch "$TEMP_DIR/script_files.txt"

grep "\(package-lock\.json\|yarn\.lock\|pnpm-lock\.yaml\)$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/lockfiles.txt" 2>/dev/null || touch "$TEMP_DIR/lockfiles.txt"

grep "shai-hulud-workflow\.yml$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/workflow_files_found.txt" 2>/dev/null || touch "$TEMP_DIR/workflow_files_found.txt"

grep "setup_bun\.js$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/setup_bun_files.txt" 2>/dev/null || touch "$TEMP_DIR/setup_bun_files.txt"

grep "bun_environment\.js$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/bun_environment_files.txt" 2>/dev/null || touch "$TEMP_DIR/bun_environment_files.txt"

grep "actionsSecrets\.json$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/actions_secrets_found.txt" 2>/dev/null || touch "$TEMP_DIR/actions_secrets_found.txt"

grep "trufflehog" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/trufflehog_files.txt" 2>/dev/null || touch "$TEMP_DIR/trufflehog_files.txt"

grep "formatter_.*\.yml$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/formatter_workflows.txt" 2>/dev/null || touch "$TEMP_DIR/formatter_workflows.txt"

# Filter GitHub workflow files specifically

grep "/.github/workflows/.*\.ya\?ml$" "$TEMP_DIR/all_files_raw.txt" > "$TEMP_DIR/github_workflows.txt" 2>/dev/null || touch "$TEMP_DIR/github_workflows.txt"

}

# Function: check_workflow_files

# Purpose: Detect malicious shai-hulud-workflow.yml files in project directories

# Args: $1 = scan_dir (directory to scan)

# Modifies: WORKFLOW_FILES (global array)

# Returns: Populates WORKFLOW_FILES array with paths to suspicious workflow files

check_workflow_files() {

local scan_dir=$1

print_status "$BLUE" " Checking for malicious workflow files..."

# Use pre-categorized files from collect_all_files (performance optimization)

while IFS= read -r file; do

if [[ -f "$file" ]]; then

echo "$file" >> "$TEMP_DIR/workflow_files.txt"

fi

done < "$TEMP_DIR/workflow_files_found.txt"

}

# Function: check_bun_attack_files

# Purpose: Detect November 2025 "Shai-Hulud: The Second Coming" Bun attack files

# Args: $1 = scan_dir (directory to scan)

# Modifies: $TEMP_DIR/bun_setup_files.txt, bun_environment_files.txt, malicious_hashes.txt

# Returns: Populates temp files with paths to suspicious Bun-related malicious files

check_bun_attack_files() {

local scan_dir=$1

print_status "$BLUE" " Checking for November 2025 Bun attack files..."

# Known malicious file hashes from Koi.ai incident report

local setup_bun_hashes=(

"a3894003ad1d293ba96d77881ccd2071446dc3f65f434669b49b3da92421901a"

)

local bun_environment_hashes=(

"62ee164b9b306250c1172583f138c9614139264f889fa99614903c12755468d0"

"f099c5d9ec417d4445a0328ac0ada9cde79fc37410914103ae9c609cbc0ee068"

"cbb9bc5a8496243e02f3cc080efbe3e4a1430ba0671f2e43a202bf45b05479cd"

)

# Look for setup_bun.js files (fake Bun runtime installation)

# Use pre-categorized files from collect_all_files (performance optimization)

if [[ -s "$TEMP_DIR/setup_bun_files.txt" ]]; then

while IFS= read -r file; do

if [[ -f "$file" ]]; then

echo "$file" >> "$TEMP_DIR/bun_setup_files.txt"

# Phase 2: Use in-memory cached hash calculation for performance

local file_hash=$(get_cached_file_hash "$file")

if [[ -n "$file_hash" ]]; then

for known_hash in "${setup_bun_hashes[@]}"; do

if [[ "$file_hash" == "$known_hash" ]]; then

echo "$file:SHA256=$file_hash (CONFIRMED MALICIOUS - Koi.ai IOC)" >> "$TEMP_DIR/malicious_hashes.txt"

break

fi

done

fi

fi

done < "$TEMP_DIR/setup_bun_files.txt"

fi

# Look for bun_environment.js files (10MB+ obfuscated payload)

# Use pre-categorized files from collect_all_files (performance optimization)

if [[ -s "$TEMP_DIR/bun_environment_files.txt" ]]; then

while IFS= read -r file; do

if [[ -f "$file" ]]; then

echo "$file" >> "$TEMP_DIR/bun_environment_files_found.txt"

# Phase 2: Use in-memory cached hash calculation for performance

local file_hash=$(get_cached_file_hash "$file")

if [[ -n "$file_hash" ]]; then

for known_hash in "${bun_environment_hashes[@]}"; do

if [[ "$file_hash" == "$known_hash" ]]; then

echo "$file:SHA256=$file_hash (CONFIRMED MALICIOUS - Koi.ai IOC)" >> "$TEMP_DIR/malicious_hashes.txt"

break

fi

done

fi

fi

done < "$TEMP_DIR/bun_environment_files.txt"

fi

}

# Function: check_new_workflow_patterns

# Purpose: Detect November 2025 new workflow file patterns and actionsSecrets.json

# Args: $1 = scan_dir (directory to scan)

# Modifies: NEW_WORKFLOW_FILES, ACTIONS_SECRETS_FILES (global arrays)

# Returns: Populates arrays with paths to new attack pattern files

check_new_workflow_patterns() {

local scan_dir=$1

print_status "$BLUE" " Checking for new workflow patterns..."

# Look for formatter_123456789.yml workflow files

# Use pre-categorized files from collect_all_files (performance optimization)

if [[ -s "$TEMP_DIR/formatter_workflows.txt" ]]; then

while IFS= read -r file; do

if [[ -f "$file" ]] && [[ "$file" == */.github/workflows/* ]]; then

echo "$file" >> "$TEMP_DIR/new_workflow_files.txt"

fi

done < "$TEMP_DIR/formatter_workflows.txt"

fi

# Look for actionsSecrets.json files (double Base64 encoded secrets)

# Use pre-categorized files from collect_all_files (performance optimization)

if [[ -s "$TEMP_DIR/actions_secrets_found.txt" ]]; then

while IFS= read -r file; do

if [[ -f "$file" ]]; then

echo "$file" >> "$TEMP_DIR/actions_secrets_files.txt"

fi

done < "$TEMP_DIR/actions_secrets_found.txt"

fi

}

# Function: check_discussion_workflows

# Purpose: Detect malicious GitHub Actions workflows with discussion triggers

# Args: $1 = scan_dir (directory to scan)

# Modifies: $TEMP_DIR/discussion_workflows.txt (temp file)

# Returns: Populates discussion_workflows.txt with paths to suspicious discussion-triggered workflows

check_discussion_workflows() {

local scan_dir=$1

print_status "$BLUE" " Checking for malicious discussion workflows..."

# Phase 3 Optimization: Batch processing with combined patterns

# Create a temporary file list for valid workflow files to process in batches

while IFS= read -r file; do

[[ -f "$file" ]] && echo "$file"

done < "$TEMP_DIR/github_workflows.txt" > "$TEMP_DIR/valid_workflows.txt"

# Check if we have any files to process

if [[ ! -s "$TEMP_DIR/valid_workflows.txt" ]]; then

return 0

fi

# Batch 1: Discussion trigger patterns (combined for efficiency)

xargs -I {} grep -l -E "on:.*discussion|on:\s*discussion" {} 2>/dev/null < "$TEMP_DIR/valid_workflows.txt" | \

while IFS= read -r file; do

echo "$file:Discussion trigger detected" >> "$TEMP_DIR/discussion_workflows.txt"

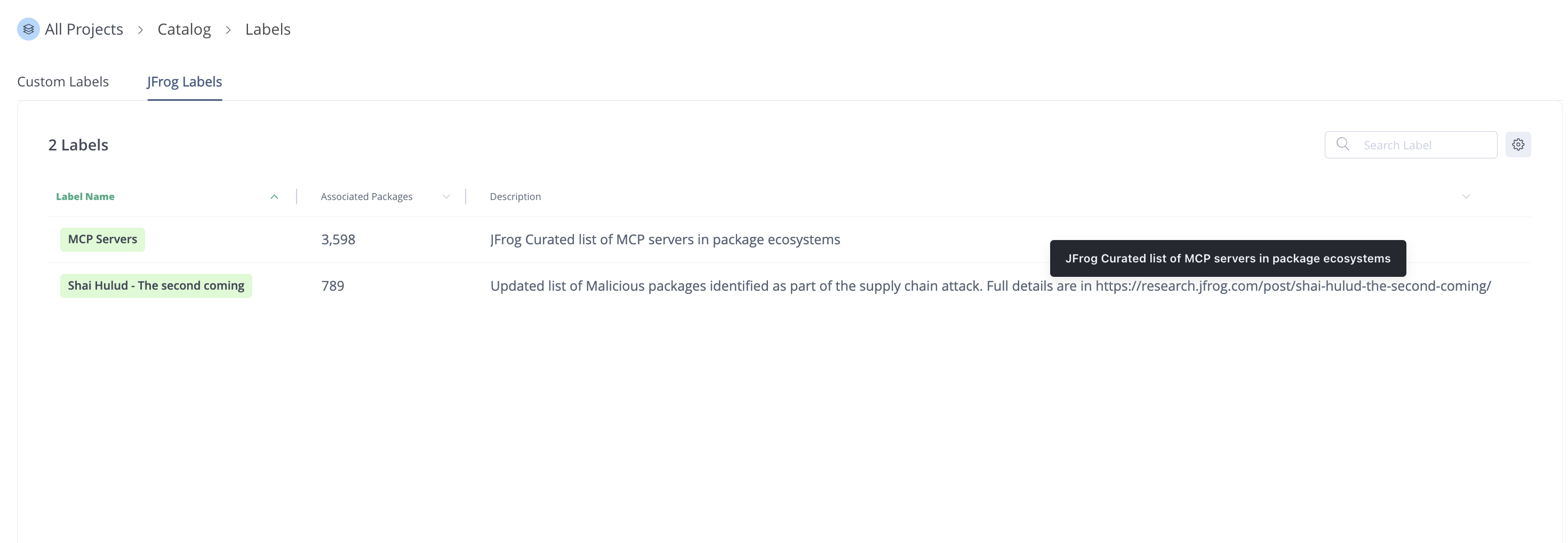

done || true

# Batch 2: Self-hosted runners with dynamic payloads (two-stage batch processing)

xargs -I {} grep -l "runs-on:.*self-hosted" {} 2>/dev/null < "$TEMP_DIR/valid_workflows.txt" | \

xargs -I {} grep -l "\${{ github\.event\..*\.body }}" {} 2>/dev/null | \

while IFS= read -r file; do

echo "$file:Self-hosted runner with dynamic payload execution" >> "$TEMP_DIR/discussion_workflows.txt"

done || true

# Batch 3: Suspicious filenames (filename-based detection)

while IFS= read -r file; do

if [[ "$(basename "$file")" == "discussion.yaml" ]] || [[ "$(basename "$file")" == "discussion.yml" ]]; then

echo "$file:Suspicious discussion workflow filename" >> "$TEMP_DIR/discussion_workflows.txt"

fi

done < "$TEMP_DIR/valid_workflows.txt"

}

# Function: check_github_runners

# Purpose: Detect self-hosted GitHub Actions runners installed by malware

# Args: $1 = scan_dir (directory to scan)

# Modifies: $TEMP_DIR/github_runners.txt (temp file)

# Returns: Populates github_runners.txt with paths to suspicious runner installations

check_github_runners() {

local scan_dir=$1

print_status "$BLUE" " Checking for malicious GitHub Actions runners..."

# Performance Optimization: Single find operation with combined patterns

{

# Use pre-collected suspicious directories if available

if [[ -f "$TEMP_DIR/suspicious_dirs.txt" ]]; then

cat "$TEMP_DIR/suspicious_dirs.txt"

fi

# Single find operation combining all patterns with timeout protection

timeout 10 find "$scan_dir" -type d \( \

-name ".dev-env" -o \

-name "actions-runner" -o \

-name ".runner" -o \

-name "_work" \

\) 2>/dev/null || true

} | sort | uniq | while IFS= read -r dir; do

if [[ -d "$dir" ]]; then

# Check for runner configuration files

if [[ -f "$dir/.runner" ]] || [[ -f "$dir/.credentials" ]] || [[ -f "$dir/config.sh" ]]; then

echo "$dir:Runner configuration files found" >> "$TEMP_DIR/github_runners.txt"

fi

# Check for runner binaries

if [[ -f "$dir/Runner.Worker" ]] || [[ -f "$dir/run.sh" ]] || [[ -f "$dir/run.cmd" ]]; then

echo "$dir:Runner executable files found" >> "$TEMP_DIR/github_runners.txt"

fi

# Check for .dev-env specifically (from Koi.ai report)

if [[ "$(basename "$dir")" == ".dev-env" ]]; then

echo "$dir:Suspicious .dev-env directory (matches Koi.ai report)" >> "$TEMP_DIR/github_runners.txt"

fi

fi

done

# Also check user home directory specifically for ~/.dev-env

if [[ -d "${HOME}/.dev-env" ]]; then

echo "${HOME}/.dev-env:Malicious runner directory in home folder (Koi.ai IOC)" >> "$TEMP_DIR/github_runners.txt"

fi

}

# Function: check_destructive_patterns

# Purpose: Detect destructive patterns that can cause data loss when credential theft fails

# Args: $1 = scan_dir (directory to scan)

# Modifies: $TEMP_DIR/destructive_patterns.txt (temp file)

# Returns: Populates destructive_patterns.txt with paths to files containing destructive patterns

check_destructive_patterns() {

local scan_dir=$1

print_status "$BLUE" " Checking for destructive payload patterns..."

# Phase 3 Optimization: Pre-compile combined regex patterns for batch processing

# Basic destructive patterns - ONLY flag when targeting user directories ($HOME, ~, /home/)

# Standalone rimraf/unlinkSync/rmSync removed to reduce false positives (GitHub issue #74)

local basic_destructive_regex="rm -rf[[:space:]]+(\\\$HOME|~[^a-zA-Z0-9_/]|/home/)|del /s /q[[:space:]]+(%USERPROFILE%|\\\$HOME)|Remove-Item -Recurse[[:space:]]+(\\\$HOME|~[^a-zA-Z0-9_/])|find[[:space:]]+(\\\$HOME|~[^a-zA-Z0-9_/]|/home/).*-exec rm|find[[:space:]]+(\\\$HOME|~[^a-zA-Z0-9_/]|/home/).*-delete|\\\$HOME/[*]|~/[*]|/home/[^/]+/[*]"

# Conditional patterns for JavaScript/Python (limited span patterns)

# Note: exec.{1,30}rm limits span to avoid matching minified code where "exec" and "rm" are far apart

local js_py_conditional_regex="if.{1,200}credential.{1,50}(fail|error).{1,50}(rm -|fs\.|rimraf|exec|spawn|child_process)|if.{1,200}token.{1,50}not.{1,20}found.{1,50}(rm -|del |fs\.|rimraf|unlinkSync|rmSync)|if.{1,200}github.{1,50}auth.{1,50}fail.{1,50}(rm -|fs\.|rimraf|exec)|catch.{1,100}(rm -rf|fs\.rm|rimraf|exec.{1,30}rm)|error.{1,100}(rm -|del |fs\.|rimraf).{1,100}(\\\$HOME|~/|home.*(directory|folder|path))"

# Shell-specific patterns (broader patterns for actual shell scripts)

local shell_conditional_regex="if.*credential.*(fail|error).*rm|if.*token.*not.*found.*(delete|rm)|if.*github.*auth.*fail.*rm|catch.*rm -rf|error.*delete.*home"

# Phase 3 Optimization: Create file category lists for batch processing

cat "$TEMP_DIR/script_files.txt" "$TEMP_DIR/code_files.txt" 2>/dev/null | sort | uniq > "$TEMP_DIR/all_script_files.txt" || touch "$TEMP_DIR/all_script_files.txt"

# Separate files by type for optimized batch processing

grep -E '\.(js|py)$' "$TEMP_DIR/all_script_files.txt" > "$TEMP_DIR/js_py_files.txt" 2>/dev/null || touch "$TEMP_DIR/js_py_files.txt"

grep -E '\.(sh|bat|ps1|cmd)$' "$TEMP_DIR/all_script_files.txt" > "$TEMP_DIR/shell_files.txt" 2>/dev/null || touch "$TEMP_DIR/shell_files.txt"

# FAST: Use xargs without -I for bulk grep (much faster)

# Batch 1: Basic destructive patterns (all file types)

if [[ -s "$TEMP_DIR/all_script_files.txt" ]]; then

xargs grep -liE "$basic_destructive_regex" < "$TEMP_DIR/all_script_files.txt" 2>/dev/null | \

while IFS= read -r file; do

echo "$file:Basic destructive pattern detected" >> "$TEMP_DIR/destructive_patterns.txt"

done || true

fi

# Batch 2: JavaScript/Python conditional patterns

if [[ -s "$TEMP_DIR/js_py_files.txt" ]]; then

xargs grep -liE "$js_py_conditional_regex" < "$TEMP_DIR/js_py_files.txt" 2>/dev/null | \

while IFS= read -r file; do

echo "$file:Conditional destruction pattern detected (JS/Python context)" >> "$TEMP_DIR/destructive_patterns.txt"

done || true

fi

# Batch 3: Shell script conditional patterns

if [[ -s "$TEMP_DIR/shell_files.txt" ]]; then

xargs grep -liE "$shell_conditional_regex" < "$TEMP_DIR/shell_files.txt" 2>/dev/null | \

while IFS= read -r file; do

echo "$file:Conditional destruction pattern detected (Shell script context)" >> "$TEMP_DIR/destructive_patterns.txt"

done || true

fi

}

# Function: check_preinstall_bun_patterns

# Purpose: Detect fake Bun runtime preinstall patterns in package.json files

# Args: $1 = scan_dir (directory to scan)

# Modifies: PREINSTALL_BUN_PATTERNS (global array)

# Returns: Populates array with files containing suspicious preinstall patterns

check_preinstall_bun_patterns() {

local scan_dir=$1

print_status "$BLUE" " Checking for fake Bun preinstall patterns..."

# Look for package.json files with suspicious "preinstall": "node setup_bun.js" pattern

while IFS= read -r file; do

if [[ -f "$file" ]]; then

# Check if the file contains the malicious preinstall pattern

if grep -q '"preinstall"[[:space:]]*:[[:space:]]*"node setup_bun\.js"' "$file" 2>/dev/null; then

echo "$file" >> "$TEMP_DIR/preinstall_bun_patterns.txt"

fi

fi

# Use pre-categorized files from collect_all_files (performance optimization)

done < "$TEMP_DIR/package_files.txt"

}

# Function: check_github_actions_runner

# Purpose: Detect SHA1HULUD GitHub Actions runners in workflow files

# Args: $1 = scan_dir (directory to scan)

# Modifies: GITHUB_SHA1HULUD_RUNNERS (global array)

# Returns: Populates array with workflow files containing SHA1HULUD runner references

check_github_actions_runner() {

local scan_dir=$1

print_status "$BLUE" " Checking for SHA1HULUD GitHub Actions runners..."

# Look for workflow files containing SHA1HULUD runner names

while IFS= read -r file; do

if [[ -f "$file" ]]; then

# Check for SHA1HULUD runner references in YAML files

if grep -qi "SHA1HULUD" "$file" 2>/dev/null; then

echo "$file" >> "$TEMP_DIR/github_sha1hulud_runners.txt"

fi

fi

# Use pre-categorized files from collect_all_files (performance optimization)

done < "$TEMP_DIR/yaml_files.txt"

}

# Function: check_second_coming_repos

# Purpose: Detect repository descriptions with "Sha1-Hulud: The Second Coming" pattern

# Args: $1 = scan_dir (directory to scan)

# Modifies: SECOND_COMING_REPOS (global array)

# Returns: Populates array with git repositories matching the description pattern

check_second_coming_repos() {

local scan_dir=$1

print_status "$BLUE" " Checking for 'Second Coming' repository descriptions..."

# Performance Optimization: Use pre-collected git repositories

local git_repos_source

if [[ -f "$TEMP_DIR/git_repos.txt" ]]; then

git_repos_source="$TEMP_DIR/git_repos.txt"

else

# Fallback with timeout protection

timeout 10 find "$scan_dir" -type d -name ".git" 2>/dev/null | sed 's|/.git$||' > "$TEMP_DIR/git_repos_fallback.txt" || true

git_repos_source="$TEMP_DIR/git_repos_fallback.txt"

fi

# Check git repositories with malicious descriptions

while IFS= read -r repo_dir; do

if [[ -d "$repo_dir/.git" ]]; then

# Check git config for repository description with timeout

local description

if command -v timeout >/dev/null 2>&1; then

# GNU timeout is available

if description=$(timeout 5s git -C "$repo_dir" config --get --local --null --default "" repository.description 2>/dev/null | tr -d '\0'); then

if [[ "$description" == *"Sha1-Hulud: The Second Coming"* ]]; then

echo "$repo_dir" >> "$TEMP_DIR/second_coming_repos.txt"

fi

fi

else

# Fallback for systems without timeout command (e.g., macOS)

if description=$(git -C "$repo_dir" config --get --local --null --default "" repository.description 2>/dev/null | tr -d '\0'); then

if [[ "$description" == *"Sha1-Hulud: The Second Coming"* ]]; then

echo "$repo_dir" >> "$TEMP_DIR/second_coming_repos.txt"

fi

fi

fi

# Skip repositories where git command times out or fails

fi

done < "$git_repos_source"

}

# Function: check_file_hashes

# Purpose: Scan files and compare SHA256 hashes against known malicious hash list

# Args: $1 = scan_dir (directory to scan)

# Modifies: MALICIOUS_HASHES (global array)

# Returns: Populates MALICIOUS_HASHES array with "file:hash" entries for matches

check_file_hashes() {

local scan_dir=$1

local totalFiles

totalFiles=$(wc -l < "$TEMP_DIR/code_files.txt" 2>/dev/null || echo "0")

# FAST FILTER: Use single find command for recently modified non-node_modules files

# This is much faster than looping through every file with stat

print_status "$BLUE" " Filtering files for hash checking..."

# Priority files: recently modified (30 days) OR known malicious patterns

{

# Priority 1: Known malicious file patterns (always check)

grep -E "(setup_bun\.js|bun_environment\.js|actionsSecrets\.json|trufflehog)" "$TEMP_DIR/code_files.txt" 2>/dev/null || true

# Priority 2: Non-node_modules files (fast grep filter)

grep -v "/node_modules/" "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | sort | uniq > "$TEMP_DIR/priority_files.txt"

local filesCount

filesCount=$(wc -l < "$TEMP_DIR/priority_files.txt" 2>/dev/null || echo "0")

print_status "$BLUE" " Checking $filesCount priority files for known malicious content (filtered from $totalFiles total)..."

# BATCH HASH: Calculate all hashes in parallel using xargs

# Create hash lookup file with format: hash filename

print_status "$BLUE" " Computing hashes in parallel..."

# FIX: Use sha256sum on Linux/WSL, shasum on macOS/Git Bash

# Check if shasum actually works (not just exists in PATH)

local hash_cmd="sha256sum"

if shasum -a 256 /dev/null &>/dev/null; then

hash_cmd="shasum -a 256"

fi

xargs -P "$PARALLELISM" $hash_cmd < "$TEMP_DIR/priority_files.txt" 2>/dev/null | \

awk '{print $1, $2}' > "$TEMP_DIR/file_hashes.txt"

# Create malicious hash lookup pattern for grep

printf '%s\n' "${MALICIOUS_HASHLIST[@]}" > "$TEMP_DIR/malicious_patterns.txt"

# Fast set intersection: find matching hashes

print_status "$BLUE" " Checking against known malicious hashes..."

while IFS=' ' read -r hash file; do

if grep -qF "$hash" "$TEMP_DIR/malicious_patterns.txt" 2>/dev/null; then

echo "$file:$hash" >> "$TEMP_DIR/malicious_hashes.txt"

fi

done < "$TEMP_DIR/file_hashes.txt"

}

# Function: transform_pnpm_yaml

# Purpose: Convert pnpm-lock.yaml to pseudo-package-lock.json format for parsing

# Args: $1 = packages_file (path to pnpm-lock.yaml)

# Modifies: None

# Returns: Outputs JSON to stdout with packages structure compatible with package-lock parser

transform_pnpm_yaml() {

declare -a path

packages_file=$1

echo -e "{"

echo -e " \"packages\": {"

depth=0

while IFS= read -r line; do

# Find indentation

sep="${line%%[^ ]*}"

currentdepth="${#sep}"

# Remove surrounding whitespace

line=${line##*( )} # From the beginning

line=${line%%*( )} # From the end

# Remove comments

line=${line%%#*}

line=${line%%*( )}

# Remove comments and empty lines

if [[ "${line:0:1}" == '#' ]] || [[ "${#line}" == 0 ]]; then

continue

fi

# split into key/val

key=${line%%:*}

key=${key%%*( )}

val=${line#*:}

val=${val##*( )}

# Save current path

path[$currentdepth]=$key

# Interested in packages.*

if [ "${path[0]}" != "packages" ]; then continue; fi

if [ "${currentdepth}" != "2" ]; then continue; fi

# Remove surrounding whitespace (yes, again)

key="${key#"${key%%[![:space:]]*}"}"

key="${key%"${key##*[![:space:]]}"}"

# Remove quote

key="${key#"${key%%[!\']*}"}"

key="${key%"${key##*[!\']}"}"

# split into name/version

name=${key%\@*}

name=${name%*( )}

version=${key##*@}

version=${version##*( )}

echo " \"${name}\": {"

echo " \"version\": \"${version}\""

echo " },"

done < "$packages_file"

echo " }"

echo "}"

}

# Function: semverParseInto

# Purpose: Parse semantic version string into major, minor, patch, and special components

# Args: $1 = version_string, $2 = major_var, $3 = minor_var, $4 = patch_var, $5 = special_var

# Modifies: Sets variables named by $2-$5 using printf -v

# Returns: Populates variables with parsed version components

# Origin: https://github.com/cloudflare/semver_bash/blob/6cc9ce10/semver.sh

semverParseInto() {

local RE='[^0-9]*\([0-9]*\)[.]\([0-9]*\)[.]\([0-9]*\)\([0-9A-Za-z-]*\)'

#MAJOR

printf -v "$2" '%s' "$(echo $1 | sed -e "s/$RE/\1/")"

#MINOR

printf -v "$3" '%s' "$(echo $1 | sed -e "s/$RE/\2/")"

#PATCH

printf -v "$4" '%s' "$(echo $1 | sed -e "s/$RE/\3/")"

#SPECIAL

printf -v "$5" '%s' "$(echo $1 | sed -e "s/$RE/\4/")"

}

# Function: semver_match

# Purpose: Check if version matches semver pattern with caret (^), tilde (~), or exact matching

# Args: $1 = test_subject (version to test), $2 = test_pattern (pattern like "^1.0.0" or "~1.1.0")

# Modifies: None

# Returns: 0 for match, 1 for no match (supports || for multi-pattern matching)

# Examples: "1.1.2" matches "^1.0.0", "~1.1.0", "*" but not "^2.0.0" or "~1.2.0"

semver_match() {

local test_subject=$1

local test_pattern=$2

# Always matches

if [[ "*" == "${test_pattern}" ]]; then

return 0

fi

# Destructure subject

local subject_major=0

local subject_minor=0

local subject_patch=0

local subject_special=0

semverParseInto ${test_subject} subject_major subject_minor subject_patch subject_special

# Handle multi-variant patterns

while IFS= read -r pattern; do

pattern="${pattern#"${pattern%%[![:space:]]*}"}"

pattern="${pattern%"${pattern##*[![:space:]]}"}"

# Always matches

if [[ "*" == "${pattern}" ]]; then

return 0

fi

local pattern_major=0

local pattern_minor=0

local pattern_patch=0

local pattern_special=0

case "${pattern}" in

^*) # Major must match

semverParseInto ${pattern:1} pattern_major pattern_minor pattern_patch pattern_special

[[ "${subject_major}" == "${pattern_major}" ]] || continue

[[ "${subject_minor}" -ge "${pattern_minor}" ]] || continue

if [[ "${subject_minor}" == "${pattern_minor}" ]]; then

[[ "${subject_patch}" -ge "${pattern_patch}" ]] || continue

fi

return 0 # Match

;;

~*) # Major+minor must match

semverParseInto ${pattern:1} pattern_major pattern_minor pattern_patch pattern_special

[[ "${subject_major}" == "${pattern_major}" ]] || continue

[[ "${subject_minor}" == "${pattern_minor}" ]] || continue

[[ "${subject_patch}" -ge "${pattern_patch}" ]] || continue

return 0 # Match

;;

*[xX]*) # Wildcard pattern (4.x, 1.2.x, 4.X, 1.2.X, etc.)

# Parse pattern components, handling 'x' wildcards specially

local pattern_parts

IFS='.' read -ra pattern_parts <<< "${pattern}"

local subject_parts

IFS='.' read -ra subject_parts <<< "${test_subject}"

# Check each component, skip comparison for 'x' wildcards

for i in 0 1 2; do

if [[ ${i} -lt ${#pattern_parts[@]} && ${i} -lt ${#subject_parts[@]} ]]; then

local pattern_part="${pattern_parts[i]}"

local subject_part="${subject_parts[i]}"

# Skip wildcard components (both lowercase x and uppercase X)

if [[ "${pattern_part}" == "x" ]] || [[ "${pattern_part}" == "X" ]]; then

continue

fi

# Extract numeric part (remove any non-numeric suffix)

pattern_part=$(echo "${pattern_part}" | sed 's/[^0-9].*//')

subject_part=$(echo "${subject_part}" | sed 's/[^0-9].*//')

# Compare numeric parts

if [[ "${subject_part}" != "${pattern_part}" ]]; then

continue 2 # Continue outer loop (try next pattern)

fi

fi

done

return 0 # Match

;;

*) # Exact match

semverParseInto ${pattern} pattern_major pattern_minor pattern_patch pattern_special

[[ "${subject_major}" -eq "${pattern_major}" ]] || continue

[[ "${subject_minor}" -eq "${pattern_minor}" ]] || continue

[[ "${subject_patch}" -eq "${pattern_patch}" ]] || continue

[[ "${subject_special}" == "${pattern_special}" ]] || continue

return 0 # MATCH

;;

esac

# Splits '||' into newlines with sed

done < <(echo "${test_pattern}" | sed 's/||/\n/g')

# Fallthrough = no match

return 1;

}

# Function: check_packages

# Purpose: Scan package.json files for compromised packages and suspicious namespaces

# Args: $1 = scan_dir (directory to scan)

# Modifies: COMPROMISED_FOUND, SUSPICIOUS_FOUND, NAMESPACE_WARNINGS (global arrays)

# Returns: Populates arrays with matches using exact and semver pattern matching

check_packages() {

local scan_dir=$1

local filesCount

filesCount=$(wc -l < "$TEMP_DIR/package_files.txt" 2>/dev/null || echo "0")

print_status "$BLUE" " Checking $filesCount package.json files for compromised packages..."

# BATCH OPTIMIZATION: Extract all deps using parallel processing

print_status "$BLUE" " Extracting dependencies from all package.json files..."

# Create optimized lookup table from compromised packages (sorted for join)

awk -F: '{print $1":"$2}' $SCRIPT_DIR/compromised-packages.txt | LC_ALL=C sort > "$TEMP_DIR/compromised_lookup.txt"

# Extract all dependencies from all package.json files using parallel xargs + awk

# Format: file_path|package_name:version

# Use awk to parse JSON dependencies - portable and fast

xargs -P "$PARALLELISM" -I {} awk -v file="{}" '

/"dependencies":|"devDependencies":/ {flag=1; next}

/^[[:space:]]*\}/ {flag=0}

flag && /^[[:space:]]*"[^"]+":/ {

# Extract "package": "version"

gsub(/^[[:space:]]*"/, "")

gsub(/":[[:space:]]*"/, ":")

gsub(/".*$/, "")

if (length($0) > 0 && index($0, ":") > 0) {

print file "|" $0

}

}

' {} < "$TEMP_DIR/package_files.txt" > "$TEMP_DIR/all_deps.txt" 2>/dev/null

# FAST SET INTERSECTION: Use awk hash lookup instead of grep per line

print_status "$BLUE" " Checking dependencies against compromised list..."

local depCount=$(wc -l < "$TEMP_DIR/all_deps.txt" 2>/dev/null || echo "0")

print_status "$BLUE" " Found $depCount total dependencies to check"

# Create sorted deps file for set intersection

cut -d'|' -f2 "$TEMP_DIR/all_deps.txt" | LC_ALL=C sort | uniq > "$TEMP_DIR/deps_only.txt"

# Find matching deps using comm (set intersection - super fast)

# FIX: Use LC_ALL=C to ensure comm uses the same sort order as sort (Git Bash compatibility)

LC_ALL=C comm -12 "$TEMP_DIR/compromised_lookup.txt" "$TEMP_DIR/deps_only.txt" > "$TEMP_DIR/matched_deps.txt"

# If matches found, map back to file paths

if [[ -s "$TEMP_DIR/matched_deps.txt" ]]; then

while IFS= read -r matched_dep; do

{ grep -F "|$matched_dep" "$TEMP_DIR/all_deps.txt" || true; } | while IFS='|' read -r file_path dep; do

[[ -n "$file_path" ]] && echo "$file_path:${dep/:/@}" >> "$TEMP_DIR/compromised_found.txt"

done

done < "$TEMP_DIR/matched_deps.txt"

fi

# Check for suspicious namespaces - simplified for speed

print_status "$BLUE" " Checking for compromised namespaces..."

# Quick check: just look in the already-extracted dependencies file

# This is much faster than re-reading all package.json files

for namespace in "${COMPROMISED_NAMESPACES[@]}"; do

# Check if any dependency starts with this namespace

if grep -q "|$namespace/" "$TEMP_DIR/all_deps.txt" 2>/dev/null; then

{ grep "|$namespace/" "$TEMP_DIR/all_deps.txt" || true; } | cut -d'|' -f1 | sort | uniq | while read -r file; do

[[ -n "$file" ]] && echo "$file:Contains packages from compromised namespace: $namespace" >> "$TEMP_DIR/namespace_warnings.txt"

done

fi

done

echo -ne "\r\033[K"

}

# Function: check_postinstall_hooks

# Purpose: Detect suspicious postinstall scripts that may execute malicious code

# Args: $1 = scan_dir (directory to scan)

# Modifies: POSTINSTALL_HOOKS (global array)

# Returns: Populates POSTINSTALL_HOOKS array with package.json files containing hooks

check_postinstall_hooks() {

local scan_dir=$1

print_status "$BLUE" " Checking for suspicious postinstall hooks..."

while IFS= read -r -d '' package_file; do

if [[ -f "$package_file" && -r "$package_file" ]]; then

# Look for postinstall scripts

if grep -q "\"postinstall\"" "$package_file" 2>/dev/null; then

local postinstall_cmd

postinstall_cmd=$(grep -A1 "\"postinstall\"" "$package_file" 2>/dev/null | grep -o '"[^"]*"' 2>/dev/null | tail -1 2>/dev/null | tr -d '"' 2>/dev/null || true) || true

# Check for suspicious patterns in postinstall commands

if [[ -n "$postinstall_cmd" ]] && ([[ "$postinstall_cmd" == *"curl"* ]] || [[ "$postinstall_cmd" == *"wget"* ]] || [[ "$postinstall_cmd" == *"node -e"* ]] || [[ "$postinstall_cmd" == *"eval"* ]]); then

echo "$package_file:Suspicious postinstall: $postinstall_cmd" >> "$TEMP_DIR/postinstall_hooks.txt"

fi

fi

fi

# Use pre-categorized files from collect_all_files (performance optimization)

done < <(tr '\n' '\0' < "$TEMP_DIR/package_files.txt")

}

# Function: check_content

# Purpose: Search for suspicious content patterns like webhook.site and malicious endpoints

# Args: $1 = scan_dir (directory to scan)

# Modifies: SUSPICIOUS_CONTENT (global array)

# Returns: Populates SUSPICIOUS_CONTENT array with files containing suspicious patterns

check_content() {

local scan_dir=$1

print_status "$BLUE" " Checking for suspicious content patterns..."

# FAST: Use xargs with grep -l for bulk searching instead of per-file grep

# Search for webhook.site references

{

xargs grep -l "webhook\.site" < <(cat "$TEMP_DIR/code_files.txt" "$TEMP_DIR/yaml_files.txt" 2>/dev/null) 2>/dev/null || true

} | while read -r file; do

[[ -n "$file" ]] && echo "$file:webhook.site reference" >> "$TEMP_DIR/suspicious_content.txt"

done

# Search for malicious webhook endpoint

{

xargs grep -l "bb8ca5f6-4175-45d2-b042-fc9ebb8170b7" < <(cat "$TEMP_DIR/code_files.txt" "$TEMP_DIR/yaml_files.txt" 2>/dev/null) 2>/dev/null || true

} | while read -r file; do

[[ -n "$file" ]] && echo "$file:malicious webhook endpoint" >> "$TEMP_DIR/suspicious_content.txt"

done

}

# Function: check_crypto_theft_patterns

# Purpose: Detect cryptocurrency theft patterns from the Chalk/Debug attack (Sept 8, 2025)

# Args: $1 = scan_dir (directory to scan)

# Modifies: CRYPTO_PATTERNS, HIGH_RISK_CRYPTO (global arrays)

# Returns: Populates arrays with wallet hijacking, XMLHttpRequest tampering, and attacker indicators

check_crypto_theft_patterns() {

local scan_dir=$1

print_status "$BLUE" " Checking for cryptocurrency theft patterns..."

# FAST: Use xargs with grep -l for bulk pattern searching

# Check for specific malicious functions from chalk/debug attack (highest priority)

{

xargs grep -lE "checkethereumw|runmask|newdlocal|_0x19ca67" < "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | while read -r file; do

[[ -n "$file" ]] && echo "$file:Known crypto theft function names detected" >> "$TEMP_DIR/crypto_patterns.txt"

done

# Check for known attacker wallets (high priority)

{

xargs grep -lE "0xFc4a4858bafef54D1b1d7697bfb5c52F4c166976|1H13VnQJKtT4HjD5ZFKaaiZEetMbG7nDHx|TB9emsCq6fQw6wRk4HBxxNnU6Hwt1DnV67" < "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | while read -r file; do

[[ -n "$file" ]] && echo "$file:Known attacker wallet address detected - HIGH RISK" >> "$TEMP_DIR/crypto_patterns.txt"

done

# Check for npmjs.help phishing domain

{

xargs grep -l "npmjs\.help" < "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | while read -r file; do

[[ -n "$file" ]] && echo "$file:Phishing domain npmjs.help detected" >> "$TEMP_DIR/crypto_patterns.txt"

done

# Check for XMLHttpRequest hijacking (medium priority - filter out framework code)

{

xargs grep -l "XMLHttpRequest\.prototype\.send" < "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | while read -r file; do

[[ -z "$file" ]] && continue

if [[ "$file" == *"/react-native/Libraries/Network/"* ]] || [[ "$file" == *"/next/dist/compiled/"* ]]; then

# Framework code - check for crypto patterns too

if grep -qE "0x[a-fA-F0-9]{40}|checkethereumw|runmask|webhook\.site|npmjs\.help" "$file" 2>/dev/null; then

echo "$file:XMLHttpRequest prototype modification with crypto patterns detected - HIGH RISK" >> "$TEMP_DIR/crypto_patterns.txt"

else

echo "$file:XMLHttpRequest prototype modification detected in framework code - LOW RISK" >> "$TEMP_DIR/crypto_patterns.txt"

fi

else

if grep -qE "0x[a-fA-F0-9]{40}|checkethereumw|runmask|webhook\.site|npmjs\.help" "$file" 2>/dev/null; then

echo "$file:XMLHttpRequest prototype modification with crypto patterns detected - HIGH RISK" >> "$TEMP_DIR/crypto_patterns.txt"

else

echo "$file:XMLHttpRequest prototype modification detected - MEDIUM RISK" >> "$TEMP_DIR/crypto_patterns.txt"

fi

fi

done

# Check for javascript obfuscation

{

xargs grep -l "javascript-obfuscator" < "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | while read -r file; do

[[ -n "$file" ]] && echo "$file:JavaScript obfuscation detected" >> "$TEMP_DIR/crypto_patterns.txt"

done

# Check for generic Ethereum wallet address patterns (MEDIUM priority)

# Files with 0x addresses AND crypto-related keywords

{

xargs grep -lE "0x[a-fA-F0-9]{40}" < "$TEMP_DIR/code_files.txt" 2>/dev/null || true

} | while read -r file; do

[[ -z "$file" ]] && continue

# Skip if already flagged as HIGH RISK

if grep -qF "$file:" "$TEMP_DIR/crypto_patterns.txt" 2>/dev/null; then

continue

fi

# Check for crypto-related context keywords

if grep -qE "ethereum|wallet|address|crypto" "$file" 2>/dev/null; then

echo "$file:Ethereum wallet address patterns detected" >> "$TEMP_DIR/crypto_patterns.txt"

fi

done

}

# Function: check_git_branches

# Purpose: Search for suspicious git branches containing "shai-hulud" in their names

# Args: $1 = scan_dir (directory to scan)

# Modifies: GIT_BRANCHES (global array)

# Returns: Populates GIT_BRANCHES array with branch names and commit hashes

check_git_branches() {

local scan_dir=$1

print_status "$BLUE" " Checking for suspicious git branches..."

# Performance Optimization: Use pre-collected git repositories and limit search scope

if [[ -f "$TEMP_DIR/git_repos.txt" ]]; then

while IFS= read -r repo_dir; do

if [[ -d "$repo_dir/.git/refs/heads" ]]; then

# Quick check: only look for shai-hulud patterns in branch names

local git_refs_dir="$repo_dir/.git/refs/heads"

if [[ -d "$git_refs_dir" ]]; then

# Use shell globbing instead of find for better performance

for branch_file in "$git_refs_dir"/*shai-hulud* "$git_refs_dir"/*shai*hulud*; do

if [[ -f "$branch_file" ]]; then

local branch_name

branch_name=$(basename "$branch_file")

local commit_hash

commit_hash=$(cat "$branch_file" 2>/dev/null || echo "unknown")

echo "$repo_dir:Branch '$branch_name' (commit: ${commit_hash:0:8}...)" >> "$TEMP_DIR/git_branches.txt"

fi

done

fi

fi

done < "$TEMP_DIR/git_repos.txt"

else

# Fallback: quick search with timeout to prevent hanging

timeout 5 find "$scan_dir" -name ".git" -type d 2>/dev/null | head -20 | while IFS= read -r git_dir; do

local repo_dir

repo_dir=$(dirname "$git_dir")

if [[ -d "$git_dir/refs/heads" ]]; then

# Quick check only

for branch_file in "$git_dir/refs/heads"/*shai-hulud*; do

if [[ -f "$branch_file" ]]; then

local branch_name

branch_name=$(basename "$branch_file")

echo "$repo_dir:Branch '$branch_name'" >> "$TEMP_DIR/git_branches.txt"

fi

done

fi

done || true # Don't fail if timeout occurs

fi

}

# Function: get_file_context

# Purpose: Classify file context for risk assessment (node_modules, source, build, etc.)

# Args: $1 = file_path (path to file)

# Modifies: None

# Returns: Echoes context string (node_modules, documentation, type_definitions, build_output, configuration, source_code)

get_file_context() {

local file_path=$1

# Check if file is in node_modules

if [[ "$file_path" == *"/node_modules/"* ]]; then

echo "node_modules"

return

fi

# Check if file is documentation

if [[ "$file_path" == *".md" ]] || [[ "$file_path" == *".txt" ]] || [[ "$file_path" == *".rst" ]]; then

echo "documentation"

return

fi

# Check if file is TypeScript definitions

if [[ "$file_path" == *".d.ts" ]]; then

echo "type_definitions"

return

fi

# Check if file is in build/dist directories

if [[ "$file_path" == *"/dist/"* ]] || [[ "$file_path" == *"/build/"* ]] || [[ "$file_path" == *"/public/"* ]]; then

echo "build_output"

return

fi

# Check if it's a config file

if [[ "$(basename "$file_path")" == *"config"* ]] || [[ "$(basename "$file_path")" == *".config."* ]]; then

echo "configuration"

return

fi

echo "source_code"

}

# Function: is_legitimate_pattern

# Purpose: Identify legitimate framework/build tool patterns to reduce false positives

# Args: $1 = file_path, $2 = content_sample (text snippet from file)

# Modifies: None

# Returns: 0 for legitimate, 1 for potentially suspicious

is_legitimate_pattern() {

local file_path=$1

local content_sample="$2"

# Vue.js development patterns

if [[ "$content_sample" == *"process.env.NODE_ENV"* ]] && [[ "$content_sample" == *"production"* ]]; then

return 0 # legitimate

fi

# Common framework patterns

if [[ "$content_sample" == *"createApp"* ]] || [[ "$content_sample" == *"Vue"* ]]; then

return 0 # legitimate

fi

# Package manager and build tool patterns

if [[ "$content_sample" == *"webpack"* ]] || [[ "$content_sample" == *"vite"* ]] || [[ "$content_sample" == *"rollup"* ]]; then

return 0 # legitimate

fi

return 1 # potentially suspicious

}

# Function: get_lockfile_version

# Purpose: Extract actual installed version from lockfile for a specific package

# Args: $1 = package_name, $2 = package_json_dir (directory containing package.json), $3 = scan_boundary (original scan directory)

# Modifies: None

# Returns: Echoes installed version or empty string if not found

get_lockfile_version() {

local package_name="$1"

local package_dir="$2"

local scan_boundary="$3"

# Search upward for lockfiles (supports packages in node_modules subdirectories)

local current_dir="$package_dir"

# Traverse up the directory tree until we find a lockfile, reach root, or hit scan boundary

while [[ "$current_dir" != "/" && "$current_dir" != "." && -n "$current_dir" ]]; do

# SECURITY: Don't search above the original scan directory boundary

if [[ ! "$current_dir/" =~ ^"$scan_boundary"/ && "$current_dir" != "$scan_boundary" ]]; then

break

fi

# Check for package-lock.json first (most common)

if [[ -f "$current_dir/package-lock.json" ]]; then

# Use the existing logic from check_package_integrity for block-based parsing

local found_version

found_version=$(awk -v pkg="node_modules/$package_name" '

$0 ~ "\"" pkg "\":" { in_block=1; brace_count=1 }

in_block && /\{/ && !($0 ~ "\"" pkg "\":") { brace_count++ }

in_block && /\}/ {

brace_count--

if (brace_count <= 0) { in_block=0 }

}

in_block && /\s*"version":/ {

# Extract version value between quotes

split($0, parts, "\"")

for (i in parts) {

if (parts[i] ~ /^[0-9]/) {

print parts[i]

exit

}

}

}

' "$current_dir/package-lock.json" 2>/dev/null || true)

if [[ -n "$found_version" ]]; then

echo "$found_version"

return

fi

fi

# Check for yarn.lock

if [[ -f "$current_dir/yarn.lock" ]]; then

# Yarn.lock format: package-name@version:

local found_version

found_version=$(grep "^\"\\?$package_name@" "$current_dir/yarn.lock" 2>/dev/null | head -1 | sed 's/.*@\([^"]*\).*/\1/' 2>/dev/null || true)

if [[ -n "$found_version" ]]; then

echo "$found_version"

return

fi

fi

# Check for pnpm-lock.yaml

if [[ -f "$current_dir/pnpm-lock.yaml" ]]; then

# Use transform_pnpm_yaml and then parse like package-lock.json

local temp_lockfile

temp_lockfile=$(mktemp "${TMPDIR:-/tmp}/pnpm-parse.XXXXXXXX")

TEMP_FILES+=("$temp_lockfile")

transform_pnpm_yaml "$current_dir/pnpm-lock.yaml" > "$temp_lockfile" 2>/dev/null

local found_version

found_version=$(awk -v pkg="$package_name" '

$0 ~ "\"" pkg "\"" { in_block=1; brace_count=1 }

in_block && /\{/ && !($0 ~ "\"" pkg "\"") { brace_count++ }

in_block && /\}/ {

brace_count--

if (brace_count <= 0) { in_block=0 }

}

in_block && /\s*"version":/ {

gsub(/.*"version":\s*"/, "")

gsub(/".*/, "")

print $0

exit

}

' "$temp_lockfile" 2>/dev/null || true)

if [[ -n "$found_version" ]]; then

echo "$found_version"

return

fi

fi

# Move to parent directory

current_dir=$(dirname "$current_dir")

done

# No lockfile or package not found

echo ""

}

# Function: check_trufflehog_activity

# Purpose: Detect Trufflehog secret scanning activity with context-aware risk assessment

# Args: $1 = scan_dir (directory to scan)

# Modifies: TRUFFLEHOG_ACTIVITY (global array)

# Returns: Populates TRUFFLEHOG_ACTIVITY array with risk level (HIGH/MEDIUM/LOW) prefixes

check_trufflehog_activity() {

local scan_dir=$1

print_status "$BLUE" " Checking for Trufflehog activity and secret scanning..."

# Look for trufflehog binary files (always HIGH RISK)

while IFS= read -r binary_file; do

if [[ -f "$binary_file" ]]; then

echo "$binary_file:HIGH:Trufflehog binary found" >> "$TEMP_DIR/trufflehog_activity.txt"

fi

done < "$TEMP_DIR/trufflehog_files.txt"

# Combine script and code files for scanning

cat "$TEMP_DIR/script_files.txt" "$TEMP_DIR/code_files.txt" 2>/dev/null | sort -u > "$TEMP_DIR/trufflehog_scan_files.txt"

# HIGH PRIORITY: Dynamic TruffleHog download patterns (November 2025 attack)

{ xargs grep -lE "curl.*trufflehog|wget.*trufflehog|bunExecutable.*trufflehog|download.*trufflehog" \

< "$TEMP_DIR/trufflehog_scan_files.txt" 2>/dev/null || true; } | while read -r file; do

[[ -n "$file" ]] && echo "$file:HIGH:November 2025 pattern - Dynamic TruffleHog download via curl/wget/Bun" >> "$TEMP_DIR/trufflehog_activity.txt"

done

# HIGH PRIORITY: TruffleHog credential harvesting patterns

{ xargs grep -lE "TruffleHog.*scan.*credential|trufflehog.*env|trufflehog.*AWS|trufflehog.*NPM_TOKEN" \

< "$TEMP_DIR/trufflehog_scan_files.txt" 2>/dev/null || true; } | while read -r file; do

[[ -n "$file" ]] && echo "$file:HIGH:TruffleHog credential scanning pattern detected" >> "$TEMP_DIR/trufflehog_activity.txt"

done

# HIGH PRIORITY: Credential patterns with exfiltration indicators

{ xargs grep -lE "(AWS_ACCESS_KEY|GITHUB_TOKEN|NPM_TOKEN).*(webhook\.site|curl|https\.request)" \

< "$TEMP_DIR/trufflehog_scan_files.txt" 2>/dev/null || true; } | \

{ grep -v "/node_modules/\|\.d\.ts$" || true; } | while read -r file; do

[[ -n "$file" ]] && echo "$file:HIGH:Credential patterns with potential exfiltration" >> "$TEMP_DIR/trufflehog_activity.txt"

done

# MEDIUM PRIORITY: Trufflehog references in source code (not node_modules/docs)

{ xargs grep -l "trufflehog\|TruffleHog" \

< "$TEMP_DIR/trufflehog_scan_files.txt" 2>/dev/null || true; } | \

{ grep -v "/node_modules/\|\.md$\|/docs/\|\.d\.ts$" || true; } | while read -r file; do

# Check if already flagged as HIGH